Linux: Container Orchestration with Mesos

TAGS: Dim

Deprecated

Architecture Overview

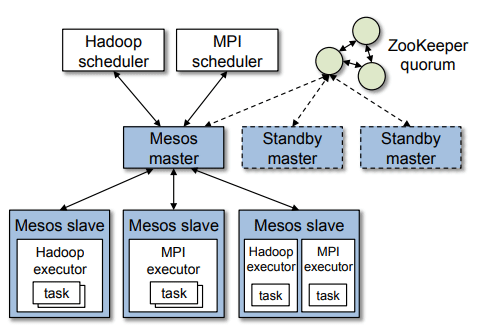

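Mesos architecture

Mesos architecture diagram

As the diagram above shows, Mesos consists of a master daemon that manages the slave daemons running on each cluster node, plus the Mesos frameworks that run tasks on those slaves.

By making resource offers, the master lets frameworks share resources (CPU, RAM, ...) at a fine granularity.

The Mesos architecture involves the following basic concepts:

- Mesos-master: coordinates all slaves, determines the resources available on each node, aggregates the reports of available resources across nodes, and then sends resource offers to the frameworks registered with the master.

- Mesos-slave: reports its idle resources and task status to the master and manages the mesos-tasks on its node. After a framework has successfully obtained resources from the master, the slave that receives the message launches that framework's executor.

- Framework: Hadoop, Spark, and so on, which connect to Mesos through MesosSchedulerDriver. A framework has two parts, a scheduler and an executor. The scheduler registers with the master and receives the offered resources; the executor process is launched on slave nodes and runs the framework's tasks.

- Executor: launches the tasks of a computing framework.

The diagram shows two frameworks, Hadoop and MPI. It also shows that Mesos has a master/slave structure: the master applies a scheduling policy (such as fair sharing or priorities) to decide which idle resources on the slave nodes (CPU, memory, disk, and so on) are offered to each framework. The master also keeps some state about frameworks and slaves. This state can be rebuilt when frameworks and slaves re-register, so ZooKeeper can be used to remove the master as a single point of failure. The slave's main jobs are to report task status to the master and to launch framework executors.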

Mesos workflow

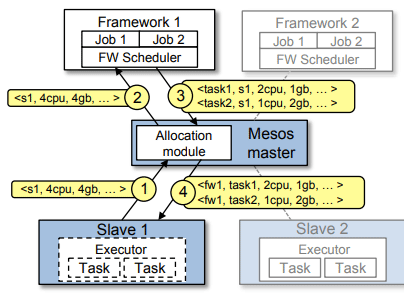

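The workflow in the diagram has four steps:

- Step 1: Slave1 reports to the master that it has 4 CPUs and 4 GB of memory free.

- Step 2: The master sends a Resource Offer to Framework1 describing how many resources Slave1 has available.

- Step 3: The FW Scheduler in Framework1 replies to the master that it has two tasks to run on Slave1: one task needs <2 CPUs, 1 GB memory> and the other needs <1 CPU, 2 GB memory>.

- Step 4: The master sends the task resource requirements to Slave1, which allocates the resources to Framework1's executor; the executor then starts the two tasks. Slave1 still has <1 CPU, 1 GB memory> unallocated, so the allocation module can offer those resources to Framework2.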
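The bookkeeping in step four can be checked with a few lines of shell arithmetic. This is only an illustrative sketch: `remaining_resources` is a hypothetical helper, not part of Mesos, and memory is counted in whole GB.

```shell
# Hypothetical helper (not a Mesos API): subtract task demands from an offer.
# Usage: remaining_resources OFFER_CPU OFFER_MEM_GB TASK_CPU:TASK_MEM_GB ...
remaining_resources() {
    local cpu=$1 mem=$2
    shift 2
    local task
    for task in "$@"; do
        cpu=$(( cpu - ${task%%:*} ))   # part before the colon is the CPU demand
        mem=$(( mem - ${task##*:} ))   # part after the colon is the memory demand
    done
    echo "$cpu $mem"
}

# Slave1 offers 4 CPUs / 4 GB; Framework1 launches <2 CPU, 1 GB> and <1 CPU, 2 GB>.
remaining_resources 4 4 2:1 1:2
```

The call prints `1 1`, matching the <1 CPU, 1 GB memory> that remains available for Framework2.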

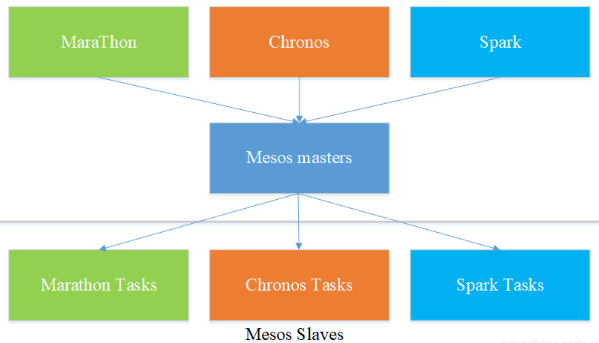

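Marathon Overview

Marathon is an open-source framework built on the Mesos framework API; see the accompanying diagram. With Marathon you specify the resources each application needs and how many instances of it to run. It uses the available cluster resources to respond automatically to failed tasks.

If a Mesos slave goes down, or an application instance exits or fails, Marathon automatically starts a new instance to replace the failed one. Marathon also lets you declare dependencies between applications at deployment time, which guarantees that an application instance is not started before the database instance it depends on.

Marathon exposes a REST API, for example:

    # 1. Start a container through Marathon
    curl -X POST -H "Accept: application/json" -H "Content-Type: application/json" \
        localhost:8080/v2/apps -d '{
            "container": {"image": "docker:///libmesos/ubuntu", "options": ["--privileged"]},
            "cpus": 0.5,
            "cmd": "sleep 500",
            "id": "docker-tester",
            "instances": 1,
            "mem": 300
        }'

    # 2. Check the state of the launched tasks through the Marathon REST API
    curl -X GET -H "Content-Type: application/json" localhost:8080/v2/apps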

Cluster Deployment

Reference:

System requirements:

- 64-bit Linux
- kernel >= 3.10
- JDK 1.8 or later

Disable SELinux enforcement and the firewall on the VMs

    setenforce 0
    systemctl stop firewalld.service

Both the hostname and the hosts file of every VM must be updated

    # Set each VM's hostname to match its role; takes effect after a reboot
    hostnamectl set-hostname master01

    cat /etc/hosts
    127.0.0.1   localhost localhost.localdomain localhost4 localhost4.localdomain4
    ::1         localhost localhost.localdomain localhost6 localhost6.localdomain6
    10.200.46.198 master01 zookeeper01 slave01 xu-1 my.registry.cici.me
    10.200.46.199 master02 zookeeper02 slave02 xu-2
    10.200.46.200 master03 zookeeper03 xu-3

Note: the hostname must be present in the hosts file.
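A quick way to verify that requirement before continuing is sketched below. `host_in_hosts_file` is a hypothetical helper written for this note, not a standard tool; it assumes the usual hosts layout of one address followed by names.

```shell
# Hypothetical check: succeed only if NAME appears as a hostname or alias
# (any field after the address) on a non-comment line of HOSTS_FILE.
host_in_hosts_file() {
    local name=$1 hosts_file=${2:-/etc/hosts}
    awk -v name="$name" '
        /^[[:space:]]*#/ { next }                                 # skip comment lines
        { for (i = 2; i <= NF; i++) if ($i == name) found = 1 }   # field 1 is the address
        END { exit (found ? 0 : 1) }
    ' "$hosts_file"
}
```

On a node it could be used as `host_in_hosts_file "$(hostname)" || echo "hostname missing from /etc/hosts"`.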

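Here Marathon is deployed on all Mesos master nodes.

Building Mesos from source (omitted)

See the official site. Note that if the network is restricted, a Maven proxy has to be configured for the build.

The steps below install from yum packages.

Yum repositories on all nodes

    # Add the Aliyun yum mirror
    wget -O /etc/yum.repos.d/CentOS-Base.repo http://mirrors.aliyun.com/repo/Centos-7.repo
    sed -i '/mirrors.aliyuncs.com/d' /etc/yum.repos.d/CentOS-Base.repo

    # Install the Mesosphere yum repository
    rpm -Uvh http://repos.mesosphere.io/el/7/noarch/RPMS/mesosphere-el-repo-7-1.noarch.rpm
    rpm --import /etc/pki/rpm-gpg/RPM-GPG-KEY-mesosphere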

ZooKeeper nodes

Install ZooKeeper

    # Alternative: yum -y install mesosphere-zookeeper
    yum install java-1.8.0-openjdk -y
    wget https://mirrors.tuna.tsinghua.edu.cn/apache/zookeeper/zookeeper-3.4.11/zookeeper-3.4.11.tar.gz
    tar xf zookeeper-3.4.11.tar.gz -C /usr/local/
    ln -sv /usr/local/zookeeper-3.4.11 /usr/local/zookeeper
    cd /usr/local/zookeeper
    mkdir -p /var/lib/zookeeper

    # Write the ZooKeeper configuration file
    cat > /usr/local/zookeeper/conf/zoo.cfg << EOF
    maxClientCnxns=100
    tickTime=2000
    dataDir=/var/lib/zookeeper
    clientPort=2181
    initLimit=10
    syncLimit=5
    # The first port (2888) carries traffic between ensemble members; only the leader listens on it
    # The second port (3888) is used only for leader election when the leader fails
    server.1=zookeeper01:2888:3888
    server.2=zookeeper02:2888:3888
    server.3=zookeeper03:2888:3888
    EOF

    # Set the ZooKeeper id: a value from 1 to 255 that is unique per node
    # on master01
    echo 1 >/var/lib/zookeeper/myid
    # on master02
    echo 2 >/var/lib/zookeeper/myid
    # on master03
    echo 3 >/var/lib/zookeeper/myid

    # Environment variables
    cat >/etc/profile.d/zookeeper.sh <<\EOF
    ZOOKEEPER=/usr/local/zookeeper
    PATH=$PATH:$ZOOKEEPER/bin
    EOF

    # systemd unit
    cat >/usr/lib/systemd/system/zookeeper.service<<\EOF
    [Unit]
    Description=Apache ZooKeeper
    After=network.target

    [Service]
    Environment="ZOOCFGDIR=/usr/local/zookeeper/conf"
    SyslogIdentifier=zookeeper
    WorkingDirectory=/usr/local/zookeeper
    ExecStart=/usr/local/zookeeper/bin/zkServer.sh start-foreground
    Restart=on-failure
    RestartSec=20
    User=root
    Group=root

    [Install]
    WantedBy=multi-user.target
    EOF

    # Start ZooKeeper
    systemctl start zookeeper
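Instead of typing a different `myid` on every node, the id can be read back from the `server.N` lines of `zoo.cfg`. The following is a minimal sketch under the assumption that the hostnames in `zoo.cfg` are short names without dots, as above; `zk_myid_for_host` is a hypothetical helper, not a ZooKeeper tool.

```shell
# Hypothetical helper: print the N of the "server.N=HOST:2888:3888" line in
# zoo.cfg whose HOST equals the given name; fail if no line matches.
# Assumes HOST contains no dots, because "." is used as a field separator.
zk_myid_for_host() {
    local host=$1 cfg=${2:-/usr/local/zookeeper/conf/zoo.cfg}
    awk -F'[.=:]' -v host="$host" '
        $1 == "server" && $3 == host { print $2; found = 1 }
        END { exit (found ? 0 : 1) }
    ' "$cfg"
}
```

For example, `zk_myid_for_host zookeeper02 > /var/lib/zookeeper/myid` would write `2` on the second node.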

mesos-master service

    # Install Mesos
    yum install mesos -y

    # Configuration
    # Set the --zk option of mesos-master
    echo "zk://10.200.46.198:2181,10.200.46.199:2181,10.200.46.200:2181/mesos" >/etc/mesos/zk

    # Set the --hostname and --ip options of mesos-master
    host_ip=$(/usr/sbin/ifconfig eth0 | sed -n '/inet /p'| awk '{print $2}')
    host_name=$(sed -rn "/$(/usr/sbin/ifconfig eth0 | sed -n '/inet /p'| awk '{print $2}')/s@[[:digit:]\.]+[[:space:]]+([[:alnum:]_-]+).*@\1@gp" /etc/hosts)
    echo $host_ip >/etc/mesos-master/hostname
    echo $host_ip >/etc/mesos-master/ip

    # Set the --quorum option of mesos-master. It must be greater than half
    # the number of masters; with 3 masters it is 2
    mesos_num=$(($(grep -c $(echo $host_name |sed -r 's@([[:alpha:]]+).*@\1@g') /etc/hosts)/2+1))
    echo $mesos_num > /etc/mesos-master/quorum

    #========= Mesos ACL
    echo '/etc/mesospasswd' > /etc/mesos-master/credentials
    echo '/etc/acls.json' > /etc/mesos-master/acls
    cat << EOF > /etc/mesospasswd
    marathon marathon
    EOF
    cat << EOF > /etc/acls.json
    {
      "register_frameworks": [
        {
          "principals": { "values": ["marathon"] },
          "roles": { "values": ["marathon"] }
        }
      ]
    }
    EOF
    #=========

    # Disable mesos-slave: in this setup the master and the slave do not run on the same server
    systemctl stop mesos-slave.service
    systemctl disable mesos-slave.service

    # Start mesos-master
    systemctl start mesos-master
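The quorum rule used above (more than half of the masters) reduces to integer arithmetic. A minimal sketch, with `mesos_quorum` as a hypothetical helper name:

```shell
# Majority quorum for N masters: floor(N / 2) + 1.
mesos_quorum() {
    local masters=$1
    echo $(( masters / 2 + 1 ))
}

mesos_quorum 3   # three masters, as in this cluster
```

For the three masters in this cluster the call prints `2`, the value written to `/etc/mesos-master/quorum`.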

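Because this setup does not run the master and the slave services side by side on the same physical host, you can run mesos-master inside Docker to save hosts:

    docker run -itd -v /etc/mesos:/etc/mesos -v /etc/mesos-master:/etc/mesos-master \
        --name mesosmaster --network host --restart=always \
        10.0.209.130:5000/mymesos-master:1.4.1 /root/run.sh

The content of /root/run.sh is:

    /usr/bin/mesos-init-wrapper master

If the mesos-master log keeps showing Replica in VOTING status received …

something is wrong: this master was previously run with the same work_dir, and that directory still holds persisted state. The master then refuses to start, to stay on the safe side, because the quorum cannot be met (fewer than quorum masters hold the same persisted state). Clearing the work_dir on every master fixes it. The command below prints the cleanup command for review:

    echo "rm -rf $(cat /etc/mesos-master/work_dir)/*"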
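Since the cleanup is an `rm -rf` built from the contents of a file, it is worth guarding against an empty or unexpected path. The sketch below keeps the "print, do not run" behaviour of the command above; `print_workdir_cleanup` is a hypothetical helper name.

```shell
# Hypothetical guard: print (do not run) the cleanup command for a work_dir,
# refusing empty, relative, or root paths.
print_workdir_cleanup() {
    local work_dir=$1
    case "$work_dir" in
        ""|"/") echo "refusing to clean '$work_dir'" >&2; return 1 ;;
        /*)     printf 'rm -rf %s/*\n' "${work_dir%/}" ;;   # strip one trailing slash
        *)      echo "work_dir must be an absolute path: $work_dir" >&2; return 1 ;;
    esac
}
```

It would be called as `print_workdir_cleanup "$(cat /etc/mesos-master/work_dir)"`, and the printed command run by hand once it has been checked.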

Marathon nodes

1) Install Marathon

    yum install marathon -y

2) Configure Marathon

    #====== Version 1.7 (environment variables) ======
    # The same option must not be set both as an environment variable and as a command-line argument
    # Environment variable format: MARATHON_ + the option name in upper case
    # The core functionality flags can be also set by environment variable MARATHON_ + the option name in all caps. For example, MARATHON_MASTER for the --master option.
    # For boolean values, set the environment variable with empty value. For example, use MARATHON_HA= to enable --ha or MARATHON_DISABLE_HA= for --disable_ha.
    mkdir -p /etc/default
    mkdir -p /etc/marathon/
    host_ip=$(/usr/sbin/ifconfig eth0 | sed -n '/inet /p'| awk '{print $2}')
    cat >> /etc/default/marathon << EOF
    #------------------------------------
    # --master option
    MARATHON_MASTER="zk://10.200.46.198:2181,10.200.46.199:2181,10.200.46.200:2181/mesos"
    # --hostname option
    MARATHON_HOSTNAME="$host_ip"
    # Task launch timeout: a task that has not reached TASK_RUNNING after 10 minutes is killed.
    # Unit: milliseconds; default 300000 (5 minutes).
    MARATHON_TASK_LAUNCH_TIMEOUT="600000"
    # --http_port option (optional), default 8080
    MARATHON_HTTP_PORT="8080"
    # High availability
    MARATHON_ZK="zk://10.200.46.198:2181,10.200.46.199:2181,10.200.46.200:2181/mesos"
    # HTTP login authentication
    MARATHON_HTTP_CREDENTIALS="wowotuan:wowotuan"
    # SSL login
    MARATHON_HTTPS_PORT="8443"
    MARATHON_SSL_KEYSTORE_PASSWORD="marathonwowopass"
    MARATHON_SSL_KEYSTORE_PATH="/etc/marathon/marathon.jks"
    #================= Marathon framework authentication
    MARATHON_MESOS_AUTHENTICATION_PRINCIPAL="marathon"
    MARATHON_MESOS_AUTHENTICATION_SECRET_FILE="/etc/marathonpasswd"
    MARATHON_MESOS_ROLE="marathon"
    #================= Other options
    # --task_lost_expunge_initial_delay: default 5 minutes, in milliseconds.
    # Delay before Marathon starts its periodic expunge (GC) of lost tasks
    MARATHON_TASK_LOST_EXPUNGE_INITIAL_DELAY="120000"
    # --task_lost_expunge_interval: default 30 seconds, in milliseconds.
    # Interval between GC runs for lost tasks
    MARATHON_TASK_LOST_EXPUNGE_INTERVAL="300000"
    #=================
    EOF
    cat << EOF > /etc/mesospasswd
    marathon marathon
    EOF
    #====== Version 1.7 ======

    #====== Version 1.4 (configuration files) ======
    mkdir -p /etc/marathon/conf
    # Enable Marathon event subscription (1.4 feature)
    echo "http_callback" > /etc/marathon/conf/event_subscriber
    host_ip=$(/usr/sbin/ifconfig eth0 | sed -n '/inet /p'| awk '{print $2}')
    echo "$host_ip" > /etc/marathon/conf/hostname
    # For development and testing (1.4 feature):
    #echo "http://172.16.49.21:8000/api/services" > /etc/marathon/conf/http_endpoints
    echo "zk://10.200.46.198:2181,10.200.46.199:2181,10.200.46.200:2181/mesos" > /etc/marathon/conf/master
    # High availability
    echo "zk://10.200.46.198:2181,10.200.46.199:2181,10.200.46.200:2181/mesos" > /etc/marathon/conf/zk
    # HTTP login authentication
    echo "wowotuan:wowotuan" >/etc/marathon/conf/http_credentials
    # SSL login
    echo "8443" >/etc/marathon/conf/https_port
    echo "marathonwowopass" > /etc/marathon/conf/ssl_keystore_password
    echo "/etc/marathon/marathon.jks" >/etc/marathon/conf/ssl_keystore_path
    #================= Marathon framework authentication
    echo 'marathon' > /etc/marathon/conf/mesos_authentication_principal
    echo '/etc/marathonpasswd' > /etc/marathon/conf/mesos_authentication_secret_file
    echo 'marathon' > /etc/marathon/conf/mesos_role
    cat << EOF > /etc/mesospasswd
    marathon marathon
    EOF
    #================= Other options
    # --task_lost_expunge_gc: default 75 seconds, in milliseconds. Time until a lost task is
    # garbage-collected and expunged from the task tracker and task repository. Deprecated after 1.4
    echo '180000' > /etc/marathon/conf/task_lost_expunge_gc
    # --task_lost_expunge_initial_delay: default 5 minutes, in milliseconds.
    # Delay before Marathon starts its periodic expunge (GC) of lost tasks
    echo '120000' > /etc/marathon/conf/task_lost_expunge_initial_delay
    # --task_lost_expunge_interval: default 30 seconds, in milliseconds.
    # Interval between GC runs for lost tasks
    echo '300000' > /etc/marathon/conf/task_lost_expunge_interval
    #====== Version 1.4 (configuration files) ======

3) Marathon certificate configuration

    export MARATHON_KEY_PASSWORD=marathonwowopass
    export MARATHON_PKCS_PASSWORD=marathonwowopass
    export MARATHON_JKS_PASSWORD=marathonwowopass
    COUNTRY=CN
    PROVINCE=BEIJING
    CITY=BEIJING
    ORGANIZATION=55tuan
    GROUP=55tuan
    HOST=MARATHON-CA
    SUBJ="/C=$COUNTRY/ST=$PROVINCE/L=$CITY/O=$ORGANIZATION/OU=$GROUP/CN=$HOST"
    mkdir -p /etc/marathon/conf
    cd /etc/marathon/

    # Generate the CA private key and the self-signed CA certificate
    touch /etc/pki/CA/{serial,index.txt}   # create the certificate serial and index files
    echo 01 > /etc/pki/CA/serial           # the serial number must be initialized on first use
    openssl genrsa -out /tmp/ca.key 2048
    openssl req -new -x509 -key /tmp/ca.key -out /tmp/ca.pem -days 36500 -subj "$SUBJ"

    # Generate the client private key and certificate request. The key is protected
    # by a passphrase so that it cannot be misused if it leaks
    openssl genrsa -des3 -out marathon.key -passout "env:MARATHON_KEY_PASSWORD"
    openssl req \
        -reqexts SAN \
        -config <(cat /etc/pki/tls/openssl.cnf \
            <(printf "[SAN]\nsubjectAltName=DNS:marathon,DNS:*.cicinet.com")) \
        -new -sha256 \
        -key marathon.key -passin "env:MARATHON_KEY_PASSWORD" -out marathon.csr \
        -subj "/C=CN/ST=BEIJING/L=BEIJING/O=55tuan/OU=55tuan/CN=marathon" \
        -days 36500

    # Sign the client certificate with the CA
    openssl ca -batch \
        -extensions SAN \
        -config <(cat /etc/pki/tls/openssl.cnf \
            <(printf "[SAN]\nsubjectAltName=DNS:marathon,DNS:*.cicinet.com")) \
        -days 36500 -notext \
        -md sha256 -in marathon.csr -out marathon.crt \
        -cert /tmp/ca.pem -keyfile /tmp/ca.key

    # Convert the certificate to a PKCS12 file, which most browsers understand
    openssl pkcs12 -name marathon -inkey marathon.key -passin "env:MARATHON_KEY_PASSWORD" \
        -in marathon.crt -password "env:MARATHON_PKCS_PASSWORD" -export -out marathon.pkcs12

    # Convert the PKCS12 file to JKS
    keytool -importkeystore -srckeystore marathon.pkcs12 -srcalias marathon \
        -srcstorepass $MARATHON_PKCS_PASSWORD -srcstoretype PKCS12 \
        -destkeystore marathon.jks -deststorepass $MARATHON_JKS_PASSWORD

- Start Marathon

    # Manual start, for testing
    /usr/share/marathon/bin/marathon \
        --master "zk://10.200.46.198:2181,10.200.46.199:2181,10.200.46.200:2181/mesos" \
        --zk "zk://10.200.46.198:2181,10.200.46.199:2181,10.200.46.200:2181/marathon" \
        --hostname "10.200.46.198" \
        --http_credentials "wowotuan:wowotuan" \
        --https_port "8443" \
        --ssl_keystore_password "marathonwowopass" \
        --ssl_keystore_path "/etc/marathon/marathon.jks"

    # Start through the systemd unit
    systemctl start marathon
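The manual start passes as command-line flags the same settings that the 1.7 configuration supplies through environment variables. The naming rule quoted in that configuration (MARATHON_ plus the option name in upper case) can be sketched as follows; `marathon_env_name` is a hypothetical helper, not something shipped with Marathon.

```shell
# Convert a Marathon command-line option (e.g. --task_launch_timeout) into
# the matching environment variable name: MARATHON_ + upper-cased option name.
marathon_env_name() {
    local opt=${1#--}   # drop the leading "--"
    printf 'MARATHON_%s\n' "$(printf '%s' "$opt" | tr '[:lower:]' '[:upper:]')"
}

marathon_env_name --master
marathon_env_name --task_launch_timeout
```

The two calls print `MARATHON_MASTER` and `MARATHON_TASK_LAUNCH_TIMEOUT`, the names used in `/etc/default/marathon`.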

mesos-slave service

Docker installation

1 Mount the disks

    parted -s /dev/vdb mklabel gpt
    parted -s /dev/vdb mkpart primary 0 100%
    mkfs.xfs /dev/vdb1
    parted -s /dev/vdc mklabel gpt
    parted -s /dev/vdc mkpart primary 0 100%
    mkfs.xfs /dev/vdc1
    mkdir /data
    echo "/dev/vdc1 /data xfs defaults 0 0" >>/etc/fstab
    mount -a

Install Docker

    yum remove docker \
        docker-client \
        docker-client-latest \
        docker-common \
        docker-latest \
        docker-latest-logrotate \
        docker-logrotate \
        docker-engine
    yum install -y yum-utils device-mapper-persistent-data lvm2
    yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo
    yum makecache fast
    yum -y install docker-ce

2 Prepare the Docker devicemapper storage driver

    # 2. Install dependencies
    # On CentOS: device-mapper-persistent-data, lvm2
    #yum install -y yum-utils device-mapper-persistent-data xfsprogs lvm2 gcc* zlib-devel curl-devel openssl-devel httpd-devel apr-devel apr-util-devel mysql-devel libffi-devel

    # 4. Create the PV
    pvcreate /dev/vdb1<<EOF
    y
    EOF

    # 5. Create the VG
    vgcreate docker /dev/vdb1

    # 6. Create two logical volumes named thinpool and thinpoolmeta. The last parameter
    #    leaves free space in the VG so that data or metadata can be extended
    #    automatically if space runs low, as a temporary stop-gap
    lvcreate --wipesignatures y -n thinpool docker -l 95%VG<<EOF
    y
    EOF
    lvcreate --wipesignatures y -n thinpoolmeta docker -l 1%VG<<EOF
    y
    EOF

    # 7. Use lvconvert to turn the volumes into a thin pool and its metadata volume
    lvconvert -y --zero n -c 512K --thinpool docker/thinpool --poolmetadata docker/thinpoolmeta

    # 8. Configure automatic extension through an LVM profile
    cat >> /etc/lvm/profile/docker-thinpool.profile << EOF
    activation {
        thin_pool_autoextend_threshold=80
        thin_pool_autoextend_percent=20
    }
    EOF
    # Settings:
    # thin_pool_autoextend_threshold  percentage of used space at which LVM tries to extend the pool (100 = disabled)
    # thin_pool_autoextend_percent    how much to extend the thin pool by, as a percentage of its size

    # 9. Apply the new LVM profile
    lvchange --metadataprofile docker-thinpool docker/thinpool

    # 10. Configure the docker unit
    vim /usr/lib/systemd/system/docker.service
    [Unit]
    Description=Docker Application Container Engine
    Documentation=https://docs.docker.com
    After=network.target docker.socket
    Requires=docker.socket

    [Service]
    Type=notify
    ExecStart=/usr/bin/docker daemon \
        --exec-opt native.cgroupdriver=systemd \
        --tlsverify -H tcp://0.0.0.0:2376 -H unix:///var/run/docker.sock \
        --tlscacert=/etc/docker/ca.pem --tlscert=/etc/docker/cert.pem \
        --tlskey=/etc/docker/key.pem --storage-driver=devicemapper \
        --storage-opt=dm.thinpooldev=/dev/mapper/docker-thinpool --storage-opt dm.use_deferred_removal=true
    MountFlags=slave
    LimitNOFILE=1048576
    LimitNPROC=1048576
    LimitCORE=infinity
    TimeoutStartSec=0

    [Install]
    WantedBy=multi-user.target

    # 11. Reload systemd and start Docker
    systemctl daemon-reload
    systemctl start docker && systemctl enable docker
    docker login -u wowotuanops -p registrywowotuan -e [email protected] tc-yz-hd1.registry.me:443
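To make the two profile settings concrete, the sketch below works through the arithmetic for a single extension step. It only illustrates the numbers (LVM does this internally), sizes are whole GB, and `thinpool_size_after_autoextend` is a hypothetical helper name.

```shell
# Illustrative arithmetic only: a pool is grown by PERCENT of its current
# size once its usage exceeds THRESHOLD percent.
thinpool_size_after_autoextend() {
    local size_gb=$1 used_gb=$2 threshold=${3:-80} percent=${4:-20}
    if [ $(( used_gb * 100 )) -gt $(( size_gb * threshold )) ]; then
        echo $(( size_gb + size_gb * percent / 100 ))
    else
        echo "$size_gb"
    fi
}

thinpool_size_after_autoextend 100 85   # 85% used: above the 80% threshold
thinpool_size_after_autoextend 100 50   # 50% used: unchanged
```

With the profile values of 80 and 20, a 100 GB pool that is 85 GB full grows to 120 GB, while one that is 50 GB full stays at 100 GB.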

3 The 18.06 packages already use the overlay2 storage driver

4 Docker daemon TLS authentication setup

- Method 1

    # Install gem
    # yum install -y gem
    # Use the Taobao RubyGems mirror
    $ gem sources --remove https://rubygems.org/
    $ gem sources -a https://ruby.taobao.org/
    # Install certificate_authority
    $ gem install certificate_authority
    # Import the script (ask me for it, or look for the ansible script I wrote on gitlab)
    # Generate the certificates. example.com is the domain through which the service is accessed;
    # for access by IP, pass the IP instead, e.g. ruby certgen.rb 10.9.120.20
    $ ruby certgen.rb example.com
    CA certificates are in /root/.docker/ca
    Client certificates are in /root/.docker
    Server certificates are in /root/.docker/example.com

    # Server side:
    # copy the three files ca.pem, cert.pem and key.pem from /root/.docker/example.com to /etc/docker/
    # and configure Docker to start with TLS
    # cat /etc/sysconfig/docker
    OPTIONS='--tlsverify -H tcp://0.0.0.0:2376 -H unix:///var/run/docker.sock --tlscacert=/etc/docker/ca.pem --tlscert=/etc/docker/cert.pem --tlskey=/etc/docker/key.pem'
    # Restart the docker daemon
    systemctl restart docker

    # Client side:
    # copy the three files ca.pem, cert.pem and key.pem from /root/.docker to the client
    # for authentication, e.g. for access from Java.

- Method 2

Create the TLS certificates (root certificate, server certificate, client certificate)

    cd /usr/anyesu/docker
    # There is a lot to do, so it goes into a shell script that takes the server IP or domain to bind as its argument
    vi tlscert.sh

The script is as follows:

    #!/bin/bash
    #
    if [ $# != 1 ] ; then
        echo "USAGE: $0 [HOST_IP]"
        exit 1;
    fi

    #============================================#
    # Key password and certificate subject       #
    # settings; adjust before use                #
    #============================================#
    PASSWORD='8#QBD2$!EmED&QxK'
    COUNTRY=CN
    PROVINCE=yourprovince
    CITY=yourcity
    ORGANIZATION=yourorganization
    GROUP=yourgroup
    NAME=yourname
    HOST=$1
    SUBJ="/C=$COUNTRY/ST=$PROVINCE/L=$CITY/O=$ORGANIZATION/OU=$GROUP/CN=$HOST"
    echo "your host is: $1"

    # 1. Generate the root RSA private key; PASSWORD protects the key file
    openssl genrsa -passout pass:$PASSWORD -aes256 -out ca-key.pem 4096
    # 2. Create the self-signed root certificate from the root private key
    openssl req -passin pass:$PASSWORD -new -x509 -days 365 -key ca-key.pem -sha256 -out ca.pem -subj "$SUBJ"

    #============================================#
    # Issue the server certificate from the root #
    #============================================#
    # 3. Generate the server private key
    openssl genrsa -out server-key.pem 4096
    # 4. Generate the server certificate request
    openssl req -new -sha256 -key server-key.pem -out server.csr -subj "/CN=$HOST"
    # 5. Bind the IP so that TLS connections by IP address are accepted
    echo subjectAltName = IP:127.0.0.1,IP:$HOST > extfile.cnf
    # 6. Sign the server certificate with the root certificate
    openssl x509 -passin pass:$PASSWORD -req -days 365 -sha256 -in server.csr -CA ca.pem -CAkey ca-key.pem -CAcreateserial -out server-cert.pem -extfile extfile.cnf

    #============================================#
    # Issue the client certificate from the root #
    #============================================#
    # 7. Generate the client private key
    openssl genrsa -out key.pem 4096
    # 8. Generate the client certificate request
    openssl req -subj '/CN=client' -new -key key.pem -out client.csr
    # 9. Client certificate extension file
    echo extendedKeyUsage = clientAuth > extfile.cnf
    # 10. Sign the client certificate with the root certificate
    openssl x509 -passin pass:$PASSWORD -req -days 365 -sha256 -in client.csr -CA ca.pem -CAkey ca-key.pem -CAcreateserial -out cert.pem -extfile extfile.cnf

    #============================================#
    # Cleanup                                    #
    #============================================#
    # Remove intermediate files
    rm -f client.csr server.csr ca.srl extfile.cnf
    # Sort the files into directories
    mkdir client server
    cp {ca,cert,key}.pem client
    cp {ca,server-cert,server-key}.pem server
    rm {cert,key,server-cert,server-key}.pem
    # Make the private keys read-only
    chmod -f 0400 ca-key.pem server/server-key.pem client/key.pem

Apply the server-side configuration

    # Make the script executable
    chmod +x tlscert.sh
    HOST_IP=127.0.0.1
    ./tlscert.sh $HOST_IP
    # Client certificates are saved in the client directory, server certificates in the server directory
    sudo cp server/* /etc/docker
    # Edit the configuration
    sudo vi /etc/default/docker
    # Change it to
    DOCKER_OPTS="--selinux-enabled --tlsverify --tlscacert=/etc/docker/ca.pem --tlscert=/etc/docker/server-cert.pem --tlskey=/etc/docker/server-key.pem -H=unix:///var/run/docker.sock -H=0.0.0.0:2375"
    # Restart docker
    sudo service docker restart

Correct ways to connect:

    # Client access with TLS options
    docker --tlsverify --tlscacert=client/ca.pem --tlscert=client/cert.pem --tlskey=client/key.pem -H tcp://127.0.0.1:2375 version
    # Access through the Docker API
    curl https://127.0.0.1:2375/images/json --cert client/cert.pem --key client/key.pem --cacert client/ca.pem
    # Simplify the client options
    sudo cp client/* ~/.docker
    # Append the environment variables
    echo -e "export DOCKER_HOST=tcp://$HOST_IP:2375 DOCKER_TLS_VERIFY=1" >> ~/.bashrc
    sudo docker version

Remember to protect the client certificate: it is the credential for connecting to the server. The root private key must also be kept safe, because anyone who obtains it can issue client certificates.

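Authentication modes

1. Server-side authentication modes

| Options | Description |
|---|---|
| tlsverify, tlscacert, tlscert, tlskey | Sends the server certificate to the client and verifies that the client certificate was issued by the specified CA (self-signed root certificate) |
| tls, tlscert, tlskey | Sends the server certificate to the client but does not verify that the client certificate was issued by the specified CA (self-signed root certificate) |

2. Client-side authentication modes

| Options | Description |
|---|---|
| tls | Verifies that the server certificate was issued by a public CA |
| tlsverify, tlscacert | Verifies that the server certificate was issued by the specified CA (self-signed root certificate) |
| tls, tlscert, tlskey | Authenticates with the client certificate but does not verify that the server certificate was issued by the specified CA (self-signed root certificate) |
| tlsverify, tlscacert, tlscert, tlskey | Authenticates with the client certificate and verifies that the server certificate was issued by the specified CA (self-signed root certificate) |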

mesos-slave installation

    host_ip=$(/usr/sbin/ifconfig eth0 | sed -n '/inet /p'| awk '{print $2}')
    host_name=$(sed -rn "/$(/usr/sbin/ifconfig eth0 | sed -n '/inet /p'| awk '{print $2}')/s@[[:digit:]\.]+[[:space:]]+([[:alnum:]_-]+).*@\1@gp" /etc/hosts)
    sudo rpm -Uvh http://repos.mesosphere.com/el/7/noarch/RPMS/mesosphere-el-repo-7-1.noarch.rpm
    sudo yum -y install mesos

    # Set the --master option of mesos-slave
    echo "zk://10.200.46.198:2181,10.200.46.199:2181,10.200.46.200:2181/mesos" >/etc/mesos/zk
    # Set the --ip and --hostname options of mesos-slave
    echo "$host_ip" > /etc/mesos-slave/ip
    echo "$host_ip" > /etc/mesos-slave/hostname

    # Running Docker containers from Marathon needs extra configuration on the slave:
    # enable the Docker containerizer through the --containerizers option
    echo 'docker,mesos' > /etc/mesos-slave/containerizers

    # Set the machine attributes
    mkdir /etc/mesos-slave/attributes
    echo "$host_ip" >/etc/mesos-slave/attributes/host
    echo 'main' > /etc/mesos-slave/attributes/hostgroup

    systemctl enable mesos-slave && systemctl stop mesos-master && systemctl disable mesos-master
    systemctl start mesos-slave

    # List the Marathon apps with their instance counts, sorted by count
    [root@nginx001 ~]# curl -s -u wowotuan:wowotuan 172.16.0.22:8080/v2/apps?embed=apps.count|jq '.apps[]|.id ,.instances' |sed -n '{N;s/\n/ /p}' |sort -nr -k2
    "/yuncapital-yzprod" 2
    ... ...

Docker registry

    # 1. Pull the docker registry image
    docker pull registry:2.5.0

    # 2. Install docker-compose
    curl -L "https://github.com/docker/compose/releases/download/1.23.2/docker-compose-$(uname -s)-$(uname -m)" -o /usr/local/bin/docker-compose
    chmod +x /usr/local/bin/docker-compose

    # 3. Set up the registry from the distribution repository
    git clone https://github.com/docker/distribution.git
    cd distribution/
    git checkout release/2.0
    cd contrib/compose/nginx/
    NAME=my.registry.cici.me
    COUNTRY=CN
    PROVINCE=BEIJING
    CITY=BEIJING
    ORGANIZATION=$NAME
    GROUP=$NAME
    HOST=$NAME
    EMAIL=[email protected]
    SUBJ="/C=$COUNTRY/ST=$PROVINCE/L=$CITY/O=$ORGANIZATION/OU=$GROUP/CN=$HOST/emailAddress=$EMAIL"
    openssl genrsa -out $NAME.pem 2048
    openssl req -new -sha256 -key $NAME.pem -out $NAME.csr -subj "$SUBJ"
    openssl x509 -req -days 3650 -in $NAME.csr -signkey $NAME.pem -out $NAME.crt

    # 4. Install httpd-tools and generate the password file
    yum install httpd-tools -y
    htpasswd -bc .htpasswd wowotuanops registrywowotuan

    # 5. Edit the Dockerfile: remove the line
    #    COPY docker-registry.conf /etc/nginx/docker-registry.conf
    #    and append the following
    echo "COPY .htpasswd /etc/nginx/.htpasswd" >>Dockerfile
    echo "COPY $NAME.pem /etc/nginx/$NAME.pem" >>Dockerfile
    echo "COPY $NAME.crt /etc/nginx/$NAME.crt" >>Dockerfile

    # 6. Write registry.conf
    # Note: add a hosts entry for the registry name on the Mesos cluster nodes.
    # nginx variables are escaped (\$) so that the shell does not expand them.
    cat >registry.conf<<EOF
    # Docker registry proxy for api versions 1 and 2
    upstream docker-registry-v2 {
      ip_hash;
      server registryv2:5000;
    }
    upstream docker-registry-api {
      ip_hash;
      server registryapi:5000;
    }

    # No client auth or TLS
    server {
      listen 5000;
      server_name $NAME;

      # disable any limits to avoid HTTP 413 for large image uploads
      client_max_body_size 0;

      # required to avoid HTTP 411: see Issue #1486 (https://github.com/docker/docker/issues/1486)
      chunked_transfer_encoding on;

      # for ssl
      ssl on;
      ssl_certificate /etc/nginx/$NAME.crt;
      ssl_certificate_key /etc/nginx/$NAME.pem;

      location /v2/ {
        # Do not allow connections from docker 1.5 and earlier
        # docker pre-1.6.0 did not properly set the user agent on ping, catch "Go *" user agents
        auth_basic "registry v2 realm";
        auth_basic_user_file /etc/nginx/.htpasswd;
        add_header 'Docker-Distribution-Api-Version' 'registry/2.0' always;
        include docker-registry-v2.conf;
      }
    }

    server {
      listen 5001;
      server_name $NAME;

      # disable any limits to avoid HTTP 413 for large image uploads
      client_max_body_size 0;

      # required to avoid HTTP 411: see Issue #1486 (https://github.com/docker/docker/issues/1486)
      chunked_transfer_encoding on;

      location /search/imagesinfo {
        proxy_set_header Host \$http_host;                              # required for docker client's sake
        proxy_set_header X-Real-IP \$remote_addr;                       # pass on real client's IP
        proxy_set_header X-Forwarded-For \$proxy_add_x_forwarded_for;
        proxy_set_header X-Forwarded-Proto \$scheme;
        proxy_read_timeout 900;
        proxy_pass http://docker-registry-api;
      }

      location /repositories {
        proxy_set_header Host \$http_host;                              # required for docker client's sake
        proxy_set_header X-Real-IP \$remote_addr;                       # pass on real client's IP
        proxy_set_header X-Forwarded-For \$proxy_add_x_forwarded_for;
        proxy_set_header X-Forwarded-Proto \$scheme;
        proxy_read_timeout 900;
        proxy_pass http://docker-registry-api;
      }
    }
    EOF

    # 7. Write docker-compose.yml
    cd ..
    cat >docker-compose.yml <<\EOF
    nginx:
      build: "nginx"
      ports:
        - "5000:5000"
        - "443:5000"
        - "80:5001"
      links:
        - registryv2:registryv2
        - registryapi:registryapi
    registryv2:
      image: myregistry.55tuan.me:443/registry:2.5.0
      ports:
        - "5000"
      volumes:
        - /data/registryv2/registry:/etc/docker/registry
        - /data/registryv2/data:/var/lib/registry
    registryapi:
      image: myregistry.55tuan.me:443/regisitryweb:v1
      ports:
        - "5001:5000"
      volumes:
        - /data/registryv2/data:/data/registryv2/data
    EOF

    mkdir /data/registryv2/{registry,data} -pv
    cat >/data/registryv2/registry/config.yml<<\EOF
    version: 0.1
    log:
      level: info
      fields:
        service: registry
    #notifications:
    ##  endpoints:
    ##    - name: local-python-8000
    ##      url: http://10.9.120.24:8000/
    ##      timeout: 1s
    ##      threshold: 10
    ##      backoff: 1s
    ##      disabled: false
    storage:
      cache:
        blobdescriptor: inmemory
      filesystem:
        rootdirectory: /var/lib/registry
      delete:
        enabled: true
    http:
      addr: :5000
    EOF

Node bootstrap script: add-mesos.sh

    cat add-mesos.sh
    #!/bin/bash
    ###time:2018-9-19
    ip=`ifconfig eth0|grep 'inet '|awk '{print $2}'`
    echo """
    192.168.100.247 mesos-master1
    192.168.101.150 mesos-master2
    192.168.101.151 mesos-master3
    192.168.100.249 mesos-slave1
    192.168.100.251 mesos-slave2
    192.168.100.250 mesos-slave3
    192.168.101.152 mesos-slave4
    192.168.101.153 mesos-slave5
    192.168.101.166 mesos-slave6
    192.168.101.167 mesos-slave7
    192.168.101.181 mesos-slave8
    192.168.101.182 mesos-slave9
    192.168.101.183 mesos-slave10
    192.168.101.184 mesos-slave11
    192.168.101.192 mesos-slave12
    192.168.101.193 mesos-slave13
    192.168.101.194 mesos-slave14
    192.168.101.195 mesos-slave15
    116.213.103.33 msg.cici
    192.168.100.44 ldapserver-44.zke.cici
    192.168.101.106 ldapserver-106.zke.cici
    192.168.101.184 mesos-slave-11
    """ >> /etc/hosts
    echo """
    nameserver 192.168.100.44
    nameserver 192.168.101.106
    """ > /etc/resolv.conf

    rpm -Uhv http://repos.mesosphere.com/el/7/noarch/RPMS/mesosphere-el-repo-7-2.noarch.rpm
    rpm -ivh https://mirrors.aliyun.com/epel/epel-release-latest-7.noarch.rpm
    yum install -y yum-utils device-mapper-persistent-data xfsprogs lvm2 gcc* zlib-devel curl-devel openssl-devel httpd-devel apr-devel apr-util-devel mysql-devel libffi-devel

    # Partition and format the data disks if that has not been done yet
    if [ ! -b "/dev/vdb1" ];then
        parted -s /dev/vdb mklabel gpt
        parted -s /dev/vdb mkpart primary 0 100%
        mkfs.xfs /dev/vdb1
    else
        echo "/dev/vdb1 exist"
    fi
    if [ ! -b "/dev/vdc1" ];then
        parted -s /dev/vdc mklabel gpt
        parted -s /dev/vdc mkpart primary 0 100%
        mkfs.xfs /dev/vdc1
    else
        echo "/dev/vdc1 exist"
    fi
    if [ ! -d "/data" ];then
        mkdir /data
    fi
    # Add the fstab entry only when it is not already present
    if ! grep -qE "/data|/dev/vdc1" /etc/fstab; then
        echo "/dev/vdc1 /data xfs defaults 0 0" >>/etc/fstab
        mount -a
    fi

    ###devicemapper
    pvcreate /dev/vdb1<<EOF
    y
    EOF
    vgcreate docker /dev/vdb1
    lvcreate --wipesignatures y -n thinpool docker -l 95%VG<<EOF
    y
    EOF
    lvcreate --wipesignatures y -n thinpoolmeta docker -l 1%VG<<EOF
    y
    EOF
    lvconvert -y --zero n -c 512K --thinpool docker/thinpool --poolmetadata docker/thinpoolmeta
    cat >> /etc/lvm/profile/docker-thinpool.profile << EOF
    activation {
        thin_pool_autoextend_threshold=80
        thin_pool_autoextend_percent=20
    }
    EOF
    lvchange --metadataprofile docker-thinpool docker/thinpool

    # Build Ruby and generate the Docker TLS certificates
    cd
    wget http://192.168.101.104/mesos/ruby-2.2.7.tar.gz
    tar -zxf ruby-2.2.7.tar.gz
    cd ruby-2.2.7
    ./configure
    make -j 3
    make install
    cd /root/ruby-2.2.7/ext/zlib
    ruby ./extconf.rb
    make
    make install
    cd /root/ruby-2.2.7/ext/openssl
    ln -s /root/ruby-2.2.7/include/ /
    ruby extconf.rb
    make -j 3
    make install
    cd /usr/local/bin/
    ./gem sources --remove https://rubygems.org/
    gem sources -a https://gems.ruby-china.com/
    ./gem install certificate_authority
    cd
    wget http://192.168.101.104/mesos/certgen1.rb
    ruby certgen1.rb $ip
    if [ ! -d "/root/.docker/$ip/" ];then
        cd
        ruby certgen1.rb $ip
    fi
    mkdir /etc/docker
    cp /root/.docker/$ip/* /etc/docker

    # Install and start Docker
    cd
    wget http://192.168.101.104/mesos/docker-engine-1.10.3-1.el7.centos.x86_64.rpm
    wget http://192.168.101.104/mesos/docker-engine-selinux-1.10.3-1.el7.centos.noarch.rpm
    wget -O /etc/pki/ca-trust/source/anchors/hb2.registry.cici.crt http://192.168.101.104/mesos/hb2.registry.cici.crt
    update-ca-trust extract
    yum localinstall -y docker-engine*
    wget -O /usr/lib/systemd/system/docker.service http://192.168.101.104/mesos/docker.service
    systemctl daemon-reload
    systemctl start docker && systemctl enable docker
    docker login -u wowotuanops -p registrywowotuan -e [email protected] hb2.registry.cici:443
    if [ $? -eq 0 ];then
        cd /root && tar -czvf /etc/docker.tar.gz .docker/
        cd
    else
        echo "docker login err"
    fi

    # Install and configure mesos-slave
    yum install mesos -y
    mkdir -p /etc/mesos-slave/attributes
    echo $ip > /etc/mesos-slave/attributes/host
    echo 'main' > /etc/mesos-slave/attributes/hostgroup
    echo 'zk://192.168.100.247:2181,192.168.101.150:2181,192.168.101.151:2181/mesos' > /etc/mesos/zk
    echo $ip > /etc/mesos-slave/hostname
    echo $ip > /etc/mesos-slave/ip
    echo 'docker,mesos' > /etc/mesos-slave/containerizers
    #systemctl enable mesos-slave && systemctl start mesos-slave && systemctl disable mesos-master

    # Consul and registrator
    wget -O /usr/bin/consul http://192.168.101.104/mesos/consul
    wget -O /etc/systemd/system/consul.service http://192.168.101.104/mesos/consul.service
    chmod +x /usr/bin/consul
    sed -i "s/IP/$ip/g" /etc/systemd/system/consul.service
    systemctl daemon-reload
    #systemctl start consul && systemctl enable consul
    docker run --restart='always' -d --name=registrator --hostname=${ip} --volume=/var/run/docker.sock:/tmp/docker.sock hb2.registry.cici:443/registrator:v7 -ip ${ip} consul://${ip}:8500

    # Monitoring scripts and cron jobs
    pip install prometheus_client
    cd /home
    wget http://192.168.101.104/mesos/sh.tar.gz
    tar zxf sh.tar.gz
    cd /data
    wget http://192.168.101.104/mesos/check_jvm.tar.gz
    tar -zxf check_jvm.tar.gz
    echo """
    */1 * * * * /usr/bin/python /home/sh/altersms.py
    0 */6 * * * cd /data/check_jvm/ && /bin/sh /data/check_jvm/check_jvm_Running.sh
    """>>/var/spool/cron/root

nginx with consul-template

https://book-consul-guide.vnzmi.com/11_consul_template.html

    # nginx with consul-template
    # 1. Look in Marathon and find the target application:
    #    open http://IP:8080/ and find the application's configuration,
    #    e.g. container_uuid=cross-bmserver-yzprod-10580
    # 2. Write the consul-template template, for example:
    [root@tc-xy-nginx001 vhosts]# head xr-cicinet.ctmpl
    upstream yzbizcenter-api-yzprod-11045 {
    {{range service "yzbizcenter-api-yzprod-11045"}}server {{.Address}}:{{.Port}};{{else}}server 1.1.1.1:8000;{{end}}
    }
    # 3. Generate the consul-template service with the script
    [root@tc-xy-nginx001 ~]# cd /home/sh/add-template/
    [root@tc-xy-nginx001 add-template]# ll
    total 112
    -rwxr-xr-x 1 root root 579 Dec 14 14:07 add-nginx-consul-template.sh
    -rw-r--r-- 1 root root 213 Jan 14 17:30 consul.cfg
    -rw-r--r-- 1 root root 453 Dec 14 14:00 consul-template.service
    [root@tc-xy-nginx001 add-template]# cat add-nginx-consul-template.sh
    #!/bin/bash
    path=../add-template
    if [ ! -n "$1" ] ;then
        echo $"usage: $0 nginx-templates-name"
    else
        if [ -f /etc/$1.cfg -a -f /etc/systemd/system/consul-template-$1.service ];then
            echo "$1 already exists!!!"
            exit 1
        else
            cp -f consul.cfg $1.cfg
            sed -i s/TEMPLATE/$1/g $1.cfg
            cp -f $1.cfg /etc/
            cp -f consul-template.service consul-template-$1.service
            sed -i s/TEMPLATE/$1/g consul-template-$1.service
            cp -f consul-template-$1.service /etc/systemd/system/
            #systemctl daemon-reload
            #systemctl enable consul-template-$1.service
        fi
    fi
    [root@tc-xy-nginx001 add-template]# cat consul.cfg
    consul = "192.168.100.9:8500"
    template {
      source = "/usr/local/nginx/conf/vhosts/TEMPLATE.ctmpl"
      destination = "/usr/local/nginx/conf/vhosts/TEMPLATE.conf"
      command = "/usr/local/nginx/sbin/nginx -s reload"
    }

Note: consul-template watches the Consul cluster for container changes in real time and reloads nginx whenever something changes.

    [root@tc-xy-nginx001 add-template]# cat consul-template.service
    # this is consul-template systemd script
    # Version: 1.0
    # Description: consul
    [Unit]
    Description=Consul-template
    After=network.target

    [Service]
    ExecStart=/usr/bin/consul-template --config /etc/TEMPLATE.cfg
    #ExecStop=/usr/bin/killall -9 /usr/bin/consul-template
    ExecStop=/usr/bin/ps aux | /usr/bin/grep "consul-template"| /usr/bin/grep TEMPLATE | /usr/bin/grep -v grep | /usr/bin/awk '{print $2}' | /usr/bin/xargs kill

    [Install]
    WantedBy=default.target
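Both `consul.cfg` and `consul-template.service` are skeletons in which the script replaces the `TEMPLATE` placeholder with the template name. That substitution step can be tried in isolation with the sketch below; `render_template_placeholder` is a hypothetical helper, and it assumes the name contains no `/` or `&`, which `sed` would treat specially.

```shell
# Hypothetical helper mirroring the sed step in add-nginx-consul-template.sh:
# replace every TEMPLATE placeholder read from stdin with the given name.
render_template_placeholder() {
    local name=$1
    sed "s/TEMPLATE/$name/g"
}

printf 'source = "/usr/local/nginx/conf/vhosts/TEMPLATE.ctmpl"\n' |
    render_template_placeholder xr-cicinet
```

For the name `xr-cicinet` this prints the `source` line pointing at `/usr/local/nginx/conf/vhosts/xr-cicinet.ctmpl`.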