Kubernetes: k8s Operations - Collecting Container Logs in k8s

- TAGS: Kubernetes


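Overview

Which logs does a K8s cluster need to collect?

- System and k8s component logs
  - /var/log/messages
  - apiserver, controller manager
- Business application logs
  - Cloud-native apps: console (stdout/stderr) logs
  - Non-cloud-native apps: log files inside the container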

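Common log-collection stacks

- Traditional architecture
  - ELK stack: Elasticsearch + Logstash + Kibana (Logstash drawbacks: runs on the JVM, uses a lot of resources, and has a complex configuration syntax)
- Kubernetes
  - EFK stack: Elasticsearch + Fluentd + Kibana
  - Filebeat: small resource footprint; injected into the Pod as a sidecar to collect log files inside containers
  - Loki: the newcomer

Fluentd advantages: in k8s, Fluentd is used instead of Logstash; its configuration is simple and it collects console output logs.

Loki: does not depend on EFK; logs can be queried directly in Grafana.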

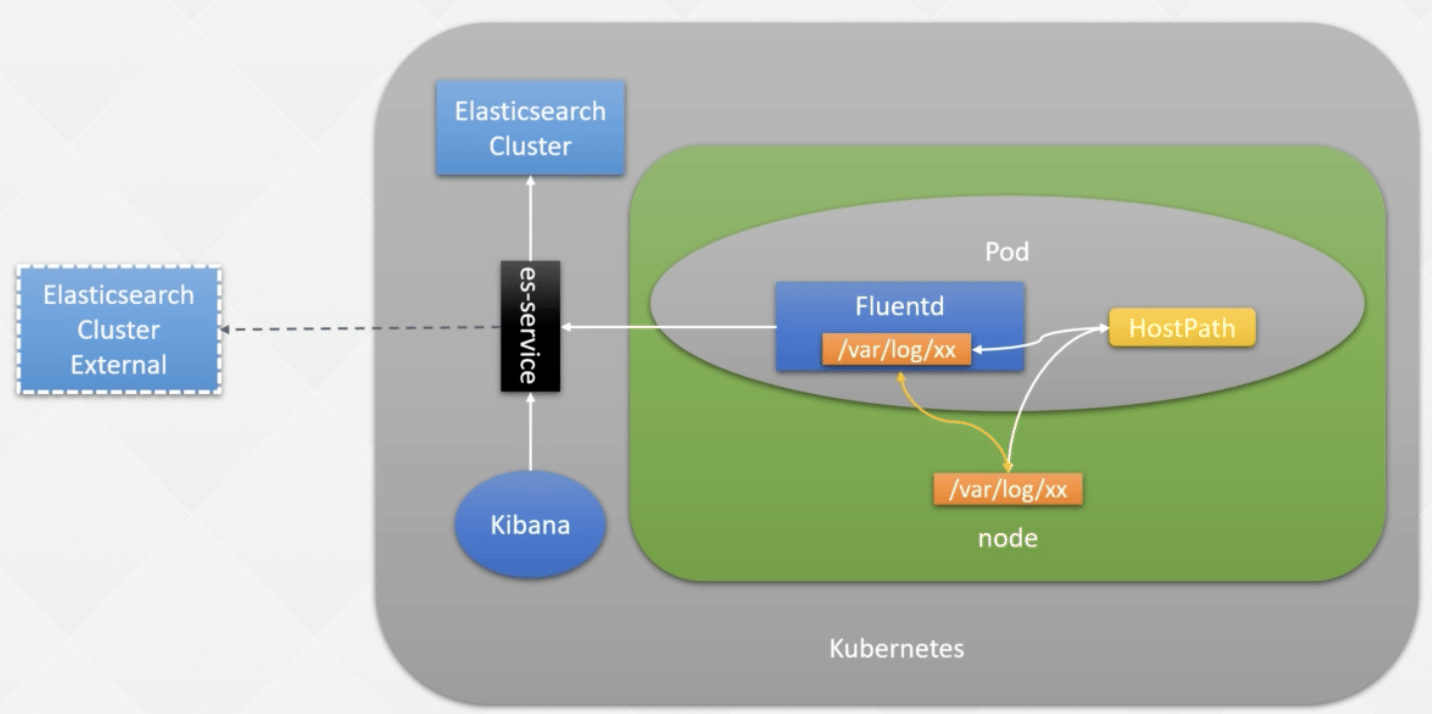

Collecting console logs with EFK

ES + Fluentd + Kibana architecture

Deploying the EFK stack to collect K8s logs

https://github.com/kubernetes/kubernetes/tree/release-1.23/cluster/addons/fluentd-elasticsearch

Collects the host logs under /var/log.

Download the deployment files:

git clone https://github.com/dotbalo/k8s.git

cd k8s/efk-7.10.2/

Create the namespace used by EFK:

kubectl create -f create-logging-namespace.yaml

Create the ES cluster:

kubectl create -f es-service.yaml
kubectl create -f es-statefulset.yaml

Create Kibana:

kubectl create -f kibana-deployment.yaml
kubectl create -f kibana-service.yaml
# If Kibana is not reachable, disable the proxy access mode in kibana-deployment.yaml
# by commenting out these two lines:
#- name: SERVER_BASEPATH
#  value: /api/v1/namespaces/kube-system/services/kibana-logging/proxy

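In k8s we may not need to collect logs from every node, so the fluentd deployment file can be changed to bind it to specific nodes (for example with a nodeSelector) before creating it: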

kubectl create -f fluentd-es-configmap.yaml -f fluentd-es-ds.yaml

Using Kibana

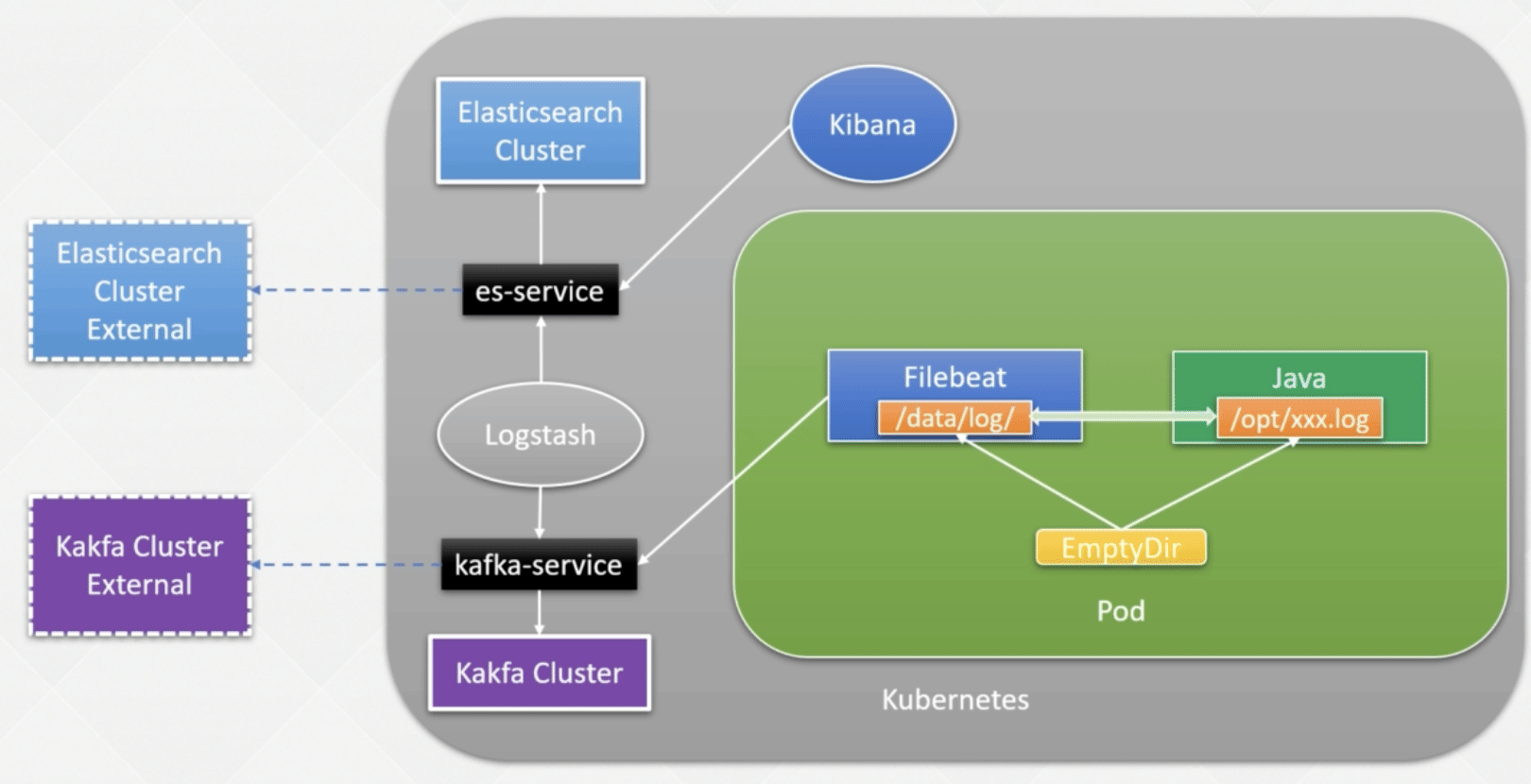

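Collecting custom log files with Filebeat

Filebeat + Kafka + Logstash + ES architecture

The Pod runs two containers:

- Business container: writes its logs to a specified file instead of the console
- Log container (Filebeat): reads that file through a shared emptyDir volume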

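Create Kafka and Logstash

First deploy Kafka and Logstash to the k8s cluster. If your organization already runs a mature stack, you can skip this step and simply point Filebeat's output at the external Kafka cluster.

Reference: https://github.com/dotbalo/k8s/tree/master/fklek/7.x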

cd filebeat

helm install zookeeper zookeeper/ -n logging

helm install kafka kafka/ -n logging

Once all the Pods are running, create the Logstash service:

kubectl create -f logstash-cm.yaml -f logstash-service.yaml -f logstash.yaml

logstash-cm.yaml

apiVersion: v1
kind: ConfigMap
metadata:
  name: logstash-configmap
data:
  logstash.yml: |
    http.host: "0.0.0.0"
    path.config: /usr/share/logstash/pipeline
  logstash.conf: |
    # all input comes from Filebeat via Kafka; no local logs
    input {
      kafka {
        enable_auto_commit => true
        auto_commit_interval_ms => "1000"
        bootstrap_servers => "kafka:9092"
        topics => ["test-filebeat"]
        codec => json
      }
    }
    output {
      stdout{ codec=>rubydebug}
      if [fields][pod_namespace] =~ "public-service" {
        elasticsearch {
          hosts => ["elasticsearch-logging:9200"]
          index => "%{[fields][pod_namespace]}-s-%{+YYYY.MM.dd}"
        }
      } else {
        elasticsearch {
          hosts => ["elasticsearch-logging:9200"]
          index => "no-index-%{+YYYY.MM.dd}"
        }
      }
    }

logstash-service.yaml

kind: Service
apiVersion: v1
metadata:
  name: logstash-service
spec:
  selector:
    app: logstash
  ports:
  - protocol: TCP
    port: 5044
    targetPort: 5044
  type: ClusterIP

logstash-deploy.yaml (the Deployment; the create command above refers to it as logstash.yaml)

apiVersion: apps/v1
kind: Deployment
metadata:
  name: logstash-deployment
spec:
  selector:
    matchLabels:
      app: logstash
  replicas: 1
  template:
    metadata:
      labels:
        app: logstash
    spec:
      containers:
      - name: logstash
        image: logstash:7.4.2
        ports:
        - containerPort: 5044
        volumeMounts:
        - name: config-volume
          mountPath: /usr/share/logstash/config
        - name: logstash-pipeline-volume
          mountPath: /usr/share/logstash/pipeline
      volumes:
      - name: config-volume
        configMap:
          name: logstash-configmap
          items:
          - key: logstash.yml
            path: logstash.yml
      - name: logstash-pipeline-volume
        configMap:
          name: logstash-configmap
          items:
          - key: logstash.conf
            path: logstash.conf

Note these settings in logstash-cm.yaml:

- input: the data source; Kafka in this example
- input.kafka.topics: the Kafka topic; it must match the topic Filebeat writes to
- output: where the data is sent; the ES cluster in this example
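The output section routes each event to an index based on its namespace field. A minimal Python model of that routing, assuming that =~ with a quoted string behaves as an unanchored regex match (like re.search) and that %{+YYYY.MM.dd} formats @timestamp in UTC; the function name logstash_index and the sample event are hypothetical:

```python
import re
from datetime import datetime, timedelta, timezone


def logstash_index(event: dict) -> str:
    """Rough model of the output block in logstash-cm.yaml (not Logstash itself)."""
    # %{+YYYY.MM.dd} formats the event's @timestamp, which Logstash keeps in UTC
    day = event["@timestamp"].astimezone(timezone.utc).strftime("%Y.%m.%d")
    namespace = event.get("fields", {}).get("pod_namespace", "")
    # `[fields][pod_namespace] =~ "public-service"` modelled as an unanchored regex search
    if re.search("public-service", namespace):
        return f"{namespace}-s-{day}"
    # events without a matching (or any) namespace fall through to the else branch
    return f"no-index-{day}"


if __name__ == "__main__":
    cst = timezone(timedelta(hours=8))  # Asia/Shanghai, the TZ used by the example Pod
    event = {
        "@timestamp": datetime(2023, 1, 5, 1, 0, tzinfo=cst),
        "fields": {"pod_namespace": "public-service"},
    }
    print(logstash_index(event))  # public-service-s-2023.01.04
```

The usage shows a side effect worth knowing: an event read at 01:00 Shanghai time is still on the previous UTC day, so it lands in the previous day's index.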

Collecting log files inside containers with Filebeat

filebeat-cm.yaml

cd /root/install-some-apps/efk
mkdir filebeat && cd filebeat

vim filebeat-cm.yaml

apiVersion: v1
kind: ConfigMap
metadata:
  name: filebeatconf
data:
  filebeat.yml: |-
    filebeat.inputs:
    - type: log
      paths:
        - /data/log/*/*.log
      tail_files: true
      fields:
        pod_name: '${podName}'
        pod_ip: '${podIp}'
        pod_deploy_name: '${podDeployName}'
        pod_namespace: '${podNamespace}'
      tags: [test-filebeat]
    output.kafka:
      # if Kafka runs in another namespace (e.g. logging), use kafka.logging:9092
      hosts: ["kafka:9092"]
      topic: "test-filebeat"
      codec.json:
        pretty: false
      keep_alive: 30s

kubectl create -f filebeat-cm.yaml -n public-service
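The Logstash condition [fields][pod_namespace] depends on how Filebeat shapes each event. A sketch, assuming Filebeat substitutes ${VAR} from the container environment and, because fields_under_root defaults to false, nests the custom fields under "fields"; the helper filebeat_event and the sample environment values are hypothetical, and string.Template only stands in for Filebeat's own expansion:

```python
from string import Template


def filebeat_event(message: str, env: dict) -> dict:
    """Approximate the event shipped by the filebeatconf input (a sketch, not Filebeat code)."""
    custom = {
        "pod_name": "${podName}",
        "pod_ip": "${podIp}",
        "pod_deploy_name": "${podDeployName}",
        "pod_namespace": "${podNamespace}",
    }
    # ${VAR} placeholders are filled from the Pod's environment (set via the Downward API)
    fields = {key: Template(value).substitute(env) for key, value in custom.items()}
    # fields_under_root is not set, so the custom fields live under the "fields" key
    return {"message": message, "fields": fields, "tags": ["test-filebeat"]}


if __name__ == "__main__":
    env = {"podName": "app-7d9f", "podIp": "10.0.0.5",
           "podDeployName": "app", "podNamespace": "public-service"}
    print(filebeat_event("Thu Jan  5 10:00:00 CST 2023", env)["fields"]["pod_namespace"])
```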

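Next, create a mock application. Create it in the same namespace as the filebeatconf ConfigMap, because a Pod can only mount ConfigMaps from its own namespace:

kubectl create -f app-filebeat.yaml -n public-service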

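The application keeps appending the current date to /opt/date.log; its command is:

command:
- sh
- -c
- while true; do date >> /opt/date.log; sleep 2; done

The /opt directory is shared: both containers mount the logpath emptyDir, the app at /opt/ and Filebeat at /data/log/app/, which matches the /data/log/*/*.log path in filebeat.yml.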

app-filebeat.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: app
  labels:
    app: app
    env: release
spec:
  selector:
    matchLabels:
      app: app
  replicas: 1
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxUnavailable: 0
      maxSurge: 1
  # minReadySeconds: 30
  template:
    metadata:
      labels:
        app: app
    spec:
      containers:
        - name: filebeat
          image: registry.cn-beijing.aliyuncs.com/dotbalo/filebeat:7.10.2
          resources:
            requests:
              memory: "100Mi"
              cpu: "10m"
            limits:
              cpu: "200m"
              memory: "300Mi"
          imagePullPolicy: IfNotPresent
          env:
            - name: podIp
              valueFrom:
                fieldRef:
                  apiVersion: v1
                  fieldPath: status.podIP
            - name: podName
              valueFrom:
                fieldRef:
                  apiVersion: v1
                  fieldPath: metadata.name
            - name: podNamespace
              valueFrom:
                fieldRef:
                  apiVersion: v1
                  fieldPath: metadata.namespace
            - name: podDeployName
              value: app
            - name: TZ
              value: "Asia/Shanghai"
          securityContext:
            runAsUser: 0
          volumeMounts:
            - name: logpath
              mountPath: /data/log/app/
            - name: filebeatconf
              mountPath: /usr/share/filebeat/filebeat.yml
              subPath: usr/share/filebeat/filebeat.yml
        - name: app
          image: registry.cn-beijing.aliyuncs.com/dotbalo/alpine:3.6
          imagePullPolicy: IfNotPresent
          volumeMounts:
            - name: logpath
              mountPath: /opt/
          env:
            - name: TZ
              value: "Asia/Shanghai"
            - name: LANG
              value: C.UTF-8
            - name: LC_ALL
              value: C.UTF-8
          command:
            - sh
            - -c
            - while true; do date >> /opt/date.log; sleep 2; done
      volumes:
        - name: logpath
          emptyDir: {}
        - name: filebeatconf
          configMap:
            name: filebeatconf
            items:
              - key: filebeat.yml
                path: usr/share/filebeat/filebeat.yml
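To check which files the sidecar will actually harvest, the sketch below models the shared logpath emptyDir from the Deployment above: it maps a path written by the app container to the path Filebeat sees, then tests it against the paths glob from filebeat.yml. Python's PurePosixPath.match is used as a stand-in for Filebeat's Go glob matching, so treat the result as an approximation; the helper names are hypothetical.

```python
from pathlib import PurePosixPath

# Mount points from app-filebeat.yaml: both containers mount the "logpath" emptyDir
APP_MOUNT = PurePosixPath("/opt")
FILEBEAT_MOUNT = PurePosixPath("/data/log/app")
FILEBEAT_GLOB = "/data/log/*/*.log"  # the paths entry in filebeat.yml


def seen_by_filebeat(app_path: str):
    """Return the path Filebeat sees for a file the app writes, or None if not shared."""
    path = PurePosixPath(app_path)
    try:
        relative = path.relative_to(APP_MOUNT)
    except ValueError:
        return None  # written outside the shared emptyDir
    return FILEBEAT_MOUNT / relative


def harvested(app_path: str) -> bool:
    target = seen_by_filebeat(app_path)
    # With an absolute pattern, match() needs a whole-path match and "*" does not
    # cross "/", roughly like the glob syntax Filebeat uses for paths
    return target is not None and target.match(FILEBEAT_GLOB)


if __name__ == "__main__":
    for p in ["/opt/date.log", "/opt/archive/old.log", "/tmp/date.log"]:
        print(p, seen_by_filebeat(p), harvested(p))
```

Note that files in subdirectories of /opt would not match, because each * covers exactly one path segment.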

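ES & Kibana

ES and Kibana are the ones installed above.

Searching logs by Pod name and namespace

Log in to Kibana and add an index pattern for the indices written by Logstash to view the logs (menu → Stack Management). The Pod metadata is then searchable through the fields.pod_name and fields.pod_namespace fields.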

Loki

Loki architecture

Collecting K8s logs with Loki

https://grafana.com/docs/loki/latest/installation/helm/install-scalable/

Loki can be installed with Helm; add the Grafana chart repository:

helm repo add grafana https://grafana.github.io/helm-charts
helm repo update

Create the loki namespace:

kubectl create ns loki

Create the Loki stack:

helm upgrade --install loki grafana/loki-stack \
  --set grafana.enabled=true \
  --set grafana.service.type=NodePort \
  -n loki
# grafana.enabled=true also installs Grafana; set it if you don't already have one

Accessing Grafana

Get the Grafana password (the user name is admin):

kubectl get secret --namespace loki loki-grafana -o jsonpath="{.data.admin-password}" | base64 -d; echo
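The pipeline above works because Secret values are only base64-encoded, not encrypted. For scripts that prefer to parse the JSON output of kubectl get secret loki-grafana -n loki -o json, a minimal Python equivalent of the jsonpath plus base64 -d step; the function name and the sample secret in the test are made up:

```python
import base64
import json


def grafana_admin_password(secret_json: str) -> str:
    """Decode .data.admin-password from `kubectl get secret ... -o json` output."""
    secret = json.loads(secret_json)
    # Same as `| base64 -d`: Secret data values are base64-encoded strings
    return base64.b64decode(secret["data"]["admin-password"]).decode("utf-8")
```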

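In the Grafana UI, click Explore in the left sidebar and select the Loki data source to query logs.

Getting started with LogQL

The query syntax (LogQL) is similar to Prometheus's PromQL.

It supports pipelines. For example, the |~ operator keeps only lines that match a regular expression, here "avg":

{namespace="kube-system", pod=~"calico.*"} |~ "avg"

Parse, then filter: keep lines containing "avg" whose longest field, extracted by the logfmt parser, is greater than 18ms:

{namespace="kube-system", pod=~"calico.*"} |~ "avg" | logfmt | longest > 18ms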
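To make the three pipeline stages concrete, here is a small Python emulation of |~ "avg" | logfmt | longest > 18ms. It is a sketch, not Loki: the logfmt parser is simplified, only a subset of duration units is accepted, the sample lines are invented, and lines whose value is missing or not a duration are simply dropped (Loki's own handling of such lines differs, see the LogQL docs).

```python
import re

# Simplified logfmt pair: key=value or key="quoted value"
_PAIR = re.compile(r'(\w+)=("(?:[^"\\]|\\.)*"|\S*)')
# Duration units accepted by this sketch (a subset of Go duration syntax)
_DURATION = re.compile(r"^([0-9]*\.?[0-9]+)(ns|us|µs|ms|s|m|h)$")
_NS_PER_UNIT = {"ns": 1, "us": 1_000, "µs": 1_000, "ms": 1_000_000,
                "s": 1_000_000_000, "m": 60_000_000_000, "h": 3_600_000_000_000}


def parse_logfmt(line: str) -> dict:
    # Strip surrounding quotes from quoted values; escapes are left untouched
    return {k: (v[1:-1] if v.startswith('"') else v) for k, v in _PAIR.findall(line)}


def duration_ns(text: str) -> float:
    match = _DURATION.match(text)
    if match is None:
        raise ValueError(f"not a duration: {text!r}")
    return float(match.group(1)) * _NS_PER_UNIT[match.group(2)]


def run_query(lines, line_regex="avg", label="longest", threshold="18ms"):
    """Emulate: |~ "avg" | logfmt | longest > 18ms"""
    limit = duration_ns(threshold)
    kept = []
    for line in lines:
        if not re.search(line_regex, line):  # |~ : regex line filter
            continue
        value = parse_logfmt(line).get(label)  # | logfmt : extract key=value labels
        try:
            if value is not None and duration_ns(value) > limit:  # duration comparison
                kept.append(line)
        except ValueError:
            pass  # this sketch drops lines whose value is not a duration
    return kept
```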

Examples

Filebeat + Logstash example 1

Consuming from Kafka and writing to ES

logstash-configmap.yaml

apiVersion: v1
data:
  jvm.options: |
    #-Xms16g
    #-Xmx16g
    -XX:+UseG1GC
    -XX:MaxGCPauseMillis=50
    -Djava.awt.headless=true
    -Dfile.encoding=UTF-8
    -Djruby.compile.invokedynamic=true
    -Djruby.jit.threshold=0
    -Djruby.regexp.interruptible=true
    -XX:+HeapDumpOnOutOfMemoryError
    -Djava.security.egd=file:/dev/urandom
    -Dlog4j2.isThreadContextMapInheritable=true
  logstash.conf: |
    input {
      kafka {
        bootstrap_servers => "kafka.public-middleware:9092"
        #client_id => "taskcenter-prd-new-${POD_NAME}"
        client_id => "taskcenter-prd-client"
        #group_id => "taskcenter-prd"
        group_id => "growth-prd"
        topics_pattern => "taskcenter-prd.*" # regex match on Kafka topic names
        consumer_threads => 6
        fetch_max_bytes => "204857600"
        fetch_max_wait_ms => "1000"
        decorate_events => basic
        auto_offset_reset => "latest"
        codec => "json"
        max_poll_records => "5000"
      }
      kafka {
        bootstrap_servers => "kafka.public-middleware:9092"
        #client_id => "messagcenter-prd-new-${POD_NAME}"
        client_id => "messagcenter-prd-client"
        #group_id => "messagecenter-prd"
        group_id => "growth-prd"
        topics_pattern => "messagecenter-prd.*" # regex match on Kafka topic names
        consumer_threads => 6
        fetch_max_wait_ms => "1000"
        decorate_events => basic
        auto_offset_reset => "latest"
        codec => "json"
        max_poll_records => "5000"
      }
      kafka {
        bootstrap_servers => "kafka.public-middleware:9092"
        #client_id => "clevertap-prd-new-${POD_NAME}"
        client_id => "clevertap-prd-client"
        #group_id => "clevertap-prd"
        group_id => "growth-prd"
        topics_pattern => "clevertap-prd.*" # regex match on Kafka topic names
        consumer_threads => 6
        fetch_max_bytes => "204857600"
        fetch_max_wait_ms => "1000"
        decorate_events => basic
        auto_offset_reset => "latest"
        codec => "json"
        max_poll_records => "5000"
      }
      kafka {
        bootstrap_servers => "kafka.public-middleware:9092"
        #client_id => "rummy-sc-prd-new-${POD_NAME}"
        client_id => "rummy-sc-prd-client"
        #group_id => "rummy-sc-prd"
        group_id => "growth-prd"
        topics_pattern => "rummy-sc-prd.*" # regex match on Kafka topic names
        consumer_threads => 6
        fetch_max_wait_ms => "1000"
        decorate_events => basic
        auto_offset_reset => "latest"
        codec => "json"
        max_poll_records => "5000"
      }
    }
    filter {
      if [kafka_topic] =~ "clevertap-prd" {
        grok {
          # patterns_dir => ["/etc/logstash/conf.d"]
          match => { "message" => "(?<mydate>\d+-\d+-\d+\s\d+\:\d+\:\d+\.\d{3})?(\s\[.+\]) %{LOGLEVEL:loglevel} (?<line>\[.*\]) (?<traceid>\[.*\]) %{GREEDYDATA:class}" }
        }
        date {
          match => ["mydate", "yyyy-MM-dd HH:mm:ss.SSS"]
          target => "@timestamp"
          timezone => "Asia/Kolkata"
        }
      }
      if [kafka_topic] =~ "taskcenter-prd" {
        grok {
          # patterns_dir => ["/etc/logstash/conf.d"]
          match => { "message" => "(?<mydate>\d+-\d+-\d+\s\d+\:\d+\:\d+\.\d{3})?(\s\[.+\]) %{LOGLEVEL:loglevel} (?<line>\[.*\]) (?<traceid>\[.*\]) %{GREEDYDATA:class}" }
        }
        date {
          match => ["mydate", "yyyy-MM-dd HH:mm:ss.SSS"]
          target => "@timestamp"
          timezone => "Asia/Kolkata"
        }
      }
      if [kafka_topic] =~ "messagecenter-prd" {
        grok {
          # patterns_dir => ["/etc/logstash/conf.d"]
          match => { "message" => "(?<mydate>\d+-\d+-\d+\s\d+\:\d+\:\d+\.\d{3})?(\s\[.+\]) %{LOGLEVEL:loglevel} (?<line>\[.*\]) (?<traceid>\[.*\]) %{GREEDYDATA:class}" }
        }
        date {
          match => ["mydate", "yyyy-MM-dd HH:mm:ss.SSS"]
          target => "@timestamp"
          timezone => "Asia/Kolkata"
        }
        #mutate {
        #  split => {"class" => ":"}
        #  add_field => { "error_class" => "%{[class][0]}" }
        #}
      }
      if [kafka_topic] =~ "rummy-sc-prd" {
        grok {
          match => { "message" => "(?<mydate>\d+-\d+-\d+\s\d+\:\d+\:\d+\.\d{3})?(\s\[.+\]) %{LOGLEVEL:loglevel} (?<line>\[.*\]) (?<traceid>\[.*\]) (?<logv>(-\s\S+\d|-\s\S+)) (param: AppLogReqParam\(userId=(?<userid>\d+( |)),\scontent=(?<content_json>(\[.*\]|\{.*\})),\stype=(?<apptype>.*)\)|)%{GREEDYDATA:class}" }
        }
        date {
          match => ["mydate", "yyyy-MM-dd HH:mm:ss.SSS"]
          target => "@timestamp"
          timezone => "Asia/Kolkata"
        }
      }
      mutate {
        remove_field => ["agent","ecs","host"]
      }
    }
    output {
      #if "-dev" in [kafka_topic] {
      #if [kafka_topic] =~ "-dev(|\s+)" {
      elasticsearch {
        #hosts => ["elasticsearch-log-master.public-middleware:9200"]
        hosts => ["elasticsearch-growth.public-middleware:9200"]
        #index => "%{kafka_topic}-%{service}-%{+YYYY.MM.dd}"
        index => "%{[@metadata][kafka][topic]}-%{service}-%{+YYYY.MM.dd}"
      }
      #}
      #if [kafka_topic] =~ "-prd(|\s+)" {
      #  elasticsearch {
      #    hosts => ["elasticsearch-log-master.public-middleware:9200"]
      #    index => "%{kafka_topic}-%{+YYYY.MM.dd}"
      #  }
      #}
    }
  logstash.yml: |
    http.host: "0.0.0.0"
    path.config: /usr/share/logstash/pipeline
    pipeline.workers: 20
    pipeline.batch.size: 50240
    pipeline.batch.delay: 10
    pipeline.ecs_compatibility: disabled
    config.reload.automatic: true
    config.reload.interval: 300s
kind: ConfigMap
metadata:
  name: logstash-growth-prd
  namespace: logstash
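The grok and date filters above can be tried outside Logstash with a rough Python translation. This is a sketch under assumptions: %{LOGLEVEL} is narrowed to a few upper-case levels (the real grok pattern accepts more spellings), %{GREEDYDATA} becomes .*, the sample log line is invented, and Asia/Kolkata is modelled as a fixed UTC+05:30 offset, which holds because India does not observe daylight saving time.

```python
import re
from datetime import datetime, timedelta, timezone

# Python translation of the grok expression shared by the first three filter blocks
GROK = re.compile(
    r"(?P<mydate>\d+-\d+-\d+\s\d+:\d+:\d+\.\d{3})?(\s\[.+\]) "
    r"(?P<loglevel>TRACE|DEBUG|INFO|WARN|ERROR|FATAL) "  # simplified %{LOGLEVEL}
    r"(?P<line>\[.*\]) (?P<traceid>\[.*\]) (?P<class>.*)"  # %{GREEDYDATA:class}
)
KOLKATA = timezone(timedelta(hours=5, minutes=30))  # fixed-offset stand-in for Asia/Kolkata


def parse(message: str):
    match = GROK.search(message)
    if match is None:
        return None  # Logstash would tag the event with _grokparsefailure instead
    event = match.groupdict()
    if event["mydate"]:
        local = datetime.strptime(event["mydate"], "%Y-%m-%d %H:%M:%S.%f")
        # date filter: read mydate as Asia/Kolkata local time, store @timestamp in UTC
        event["@timestamp"] = local.replace(tzinfo=KOLKATA).astimezone(timezone.utc)
    return event


if __name__ == "__main__":
    sample = ("2023-01-05 03:00:00.123 [http-nio-18081-exec-1] INFO "
              "[TaskController.java:42] [trace-abc] com.example.TaskController: done")
    print(parse(sample))
```

Because @timestamp ends up in UTC, a line logged at 03:00 IST on 5 January is indexed under 4 January by the %{+YYYY.MM.dd} part of the output index.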

logstash-deployment.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: logstash-growth-prd
  name: logstash-growth-prd
  namespace: logstash
spec:
  replicas: 2
  strategy:
    rollingUpdate:
      maxSurge: 25%
      maxUnavailable: 25%
    type: RollingUpdate
  selector:
    matchLabels:
      app: logstash-growth-prd
  template:
    metadata:
      labels:
        app: logstash-growth-prd
    spec:
      nodeSelector:
        type: ops-prod-logstash
        #type: ops-prod-infra
      affinity:
        podAntiAffinity:
          preferredDuringSchedulingIgnoredDuringExecution:
          - podAffinityTerm:
              labelSelector:
                matchLabels:
                  app: logstash-growth-prd
              namespaces:
              - logstash
              topologyKey: kubernetes.io/hostname
            weight: 1
      containers:
      - env:
        - name: ES_HOSTS
          value: es:9200
        #- name: MY_NODE_NAME
        #  valueFrom:
        #    fieldRef:
        #      apiVersion: v1
        #      fieldPath: spec.nodeName
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: TZ
          value: Asia/Calcutta
        image: docker.elastic.co/logstash/logstash:7.17.8
        imagePullPolicy: IfNotPresent
        name: logstash-growth-prd
        ports:
        - containerPort: 5044
          protocol: TCP
        #resources:
        #  requests:
        #    memory: "10Gi"
        #    cpu: "1"
        #  limits:
        #    memory: "30Gi"
        #    cpu: "16"
        securityContext:
          privileged: true
        terminationMessagePath: /dev/termination-log
        terminationMessagePolicy: File
        volumeMounts:
        # subPath mounts do not receive ConfigMap updates, so config.reload.automatic
        # will not see edits to the ConfigMap; restart the Pods after changing it
        - mountPath: /usr/share/logstash/config/jvm.options
          name: conf
          subPath: jvm.options
        - mountPath: /usr/share/logstash/config/logstash.yml
          name: conf
          subPath: logstash.yml
        - mountPath: /usr/share/logstash/pipeline/logstash.conf
          name: conf
          subPath: logstash.conf
        - mountPath: /etc/localtime
          name: time
      restartPolicy: Always
      volumes:
      - configMap:
          defaultMode: 420
          name: logstash-growth-prd
        name: conf
      - hostPath:
          path: /usr/share/zoneinfo/Asia/Calcutta
          type: ""
        name: time

filebeat

$ cat config/app-config.yaml

apiVersion: v1
data:
  TZ: Asia/Calcutta
  APP_NAMESPACE: taskcenter-prd
  APP_ENV: prod
  APP_JVM_CONFIG: |-
    -Dfile.encoding=utf-8
    -server
    -XX:+UseG1GC
    -XX:+ExitOnOutOfMemoryError
    -XX:InitialRAMPercentage=75.0
    -XX:MinRAMPercentage=75.0
    -XX:MaxRAMPercentage=75.0
    -XX:+HeapDumpOnOutOfMemoryError
    -XX:HeapDumpPath=/opt/logs/
  PreStop.sh: |-
    #! /bin/bash
    sleep 50
    curl --connect-timeout 5 --max-time 5 -s -i -H "Content-Type: application/json" -X POST http://localhost:8099/actuator/shutdown
    sleep 60
  PreStop_task.sh: |-
    #! /bin/bash
    sleep 50
    curl --connect-timeout 5 --max-time 5 -s -i "http://localhost:8080/xxljob/shutdown"
    sleep 20
    curl --connect-timeout 5 --max-time 5 -s -i -H "Content-Type: application/json" -X POST http://localhost:8099/actuator/shutdown
    sleep 40
  app-config.yml: |-
    - type: log
      scan_frequency: 10s
      paths:
        - '/data/logs/${POD_NAME}/*/*.log'
      fields:
        service: ${SERVICE_NAME}
        kafka_topic: ${KAFKA_TOPIC_NAME}
        # LOG_TYPE: 'dc'
        # POD_IP: '${POD_IP}'
        # POD_NAME: '${POD_NAME}'
        # NODE_NAME: '${NODE_NAME}'
        # POD_NAMESPACE: '${POD_NAMESPACE}'
      # json.overwrite_keys: true
      # json.keys_under_root: true
      force_close_files: true
      fields_under_root: true
      ignore_older: 24h # ignore log files older than one day
      clean_inactive: 25h
      close_inactive: 20m
      exclude_lines: ['DEBUG']
      exclude_files: ['.gz$', 'gc.log']
      tags: ['taskcenter']
      multiline.pattern: '^([0-9]{4}-[0-9]{2}-[0-9]{2}[[:space:]][0-9]{2}:[0-9]{2}:[0-9]{2}\.[0-9]{3}[[:space:]]\[)'
      multiline.negate: true
      multiline.match: after
  filebeat.yml: |-
    filebeat.config.inputs:
      path: ${path.config}/conf.d/app-config.yml
      enabled: true
      reload.enabled: true
      reload.period: 10s
    # limit a single log event to 20 KB; max_bytes is a log-input option,
    # so it may need to move into app-config.yml to take effect
    max_bytes: 20480
    # ============================== Filebeat modules ==============================
    filebeat.config.modules:
      path: ${path.config}/modules.d/*.yml
      reload.enabled: false
      reload.period: 10s
    # =================================== Filebeat output ===================================
    output.kafka:
      enabled: true
      hosts: ["kafka.xxx.com:9094"]
      topic: '%{[kafka_topic]}'
      partition.round_robin:
        reachable_only: false
      required_acks: 1
      compression: gzip
      max_message_bytes: 1000000
    # =================================== Queue ===================================
    max_procs: 1
    queue.mem:
      events: 2048
      flush.min_events: 1024
      flush.timeout: 2s
kind: ConfigMap
metadata:
  name: app-config
  namespace: taskcenter
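The multiline settings in app-config.yml (negate: true, match: after) glue every line that does not start with a timestamp onto the previous event, so a Java stack trace travels as one message, and exclude_lines is then applied to the combined event. A Python sketch of that behaviour, assuming the POSIX class [[:space:]] (valid in Filebeat's Go regex syntax but not in Python's re) can be replaced by \s; the sample lines and helper names are hypothetical:

```python
import re

# multiline.pattern from app-config.yml with [[:space:]] rewritten as \s
START = re.compile(
    r"^([0-9]{4}-[0-9]{2}-[0-9]{2}\s[0-9]{2}:[0-9]{2}:[0-9]{2}\.[0-9]{3}\s\[)"
)


def group_multiline(lines):
    """negate: true + match: after -> non-matching lines join the previous event."""
    events = []
    for line in lines:
        if START.search(line) or not events:
            events.append(line)  # a timestamped line starts a new event
        else:
            events[-1] += "\n" + line  # continuation line, e.g. a stack frame
    return events


def filter_events(events, exclude=("DEBUG",)):
    # exclude_lines is applied to the already-combined multiline event
    return [e for e in events if not any(re.search(p, e) for p in exclude)]


if __name__ == "__main__":
    raw = [
        "2023-01-05 10:00:00.001 [main] ERROR [A.java:1] [t1] boom",
        "java.lang.IllegalStateException: x",
        "\tat com.example.A.run(A.java:1)",
        "2023-01-05 10:00:01.002 [main] DEBUG [A.java:2] [t2] noisy",
        "2023-01-05 10:00:02.003 [main] INFO [A.java:3] [t3] ok",
    ]
    for event in filter_events(group_multiline(raw)):
        print(repr(event))
```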

Deployment with the log (Filebeat) sidecar container

$ cat task-center-web/deployment.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: task-center-web
    department: taskcenter
    env: prod
  name: task-center-web
  namespace: taskcenter
spec:
  replicas: 4
  selector:
    matchLabels:
      app: task-center-web
      department: taskcenter
      env: prod
  strategy:
    rollingUpdate:
      maxSurge: 1
      maxUnavailable: 1
    type: RollingUpdate
  template:
    metadata:
      annotations:
        prometheus.io/path: /actuator/prometheus
        prometheus.io/port: "10081"
        prometheus.io/scrape: "true"
      labels:
        app: task-center-web
        department: taskcenter
        env: prod
    spec:
      terminationGracePeriodSeconds: 90
      nodeSelector:
        type: paltform-growth-arm
      topologySpreadConstraints:
      - maxSkew: 2
        topologyKey: eks.amazonaws.com/capacityType
        whenUnsatisfiable: ScheduleAnyway
        labelSelector:
          matchLabels:
            app: task-center-web
      affinity:
        podAntiAffinity:
          preferredDuringSchedulingIgnoredDuringExecution:
          - podAffinityTerm:
              labelSelector:
                matchLabels:
                  app: task-center-web
              namespaces:
              - taskcenter
              topologyKey: kubernetes.io/hostname
            weight: 1
        #nodeAffinity:
        #  preferredDuringSchedulingIgnoredDuringExecution:
        #  - weight: 100
        #    preference:
        #      matchExpressions:
        #      - key: eks.amazonaws.com/capacityType
        #        operator: In
        #        values:
        #        - SPOT
      initContainers:
      - image: ops-harbor.xxx.com/devops/skywalking-agent:vv8.7.0
        name: skywalking-sidecar
        imagePullPolicy: Always
        command: ['sh']
        args: ['-c', 'mkdir -p /skywalking && /bin/cp -rf /opt/skywalking/agent /skywalking']
        volumeMounts:
        - mountPath: /skywalking
          name: skywalking
      containers:
      - args:
        - -javaagent:/opt/skywalking/agent/skywalking-agent.jar
        - -server
        - -XX:+HeapDumpOnOutOfMemoryError
        - -XX:HeapDumpPath=logs/
        - -Xms6g
        - -Xmx6g
        - -XX:G1HeapRegionSize=16m
        - -XX:G1ReservePercent=25
        - -XX:InitiatingHeapOccupancyPercent=30
        - -XX:SoftRefLRUPolicyMSPerMB=0
        - -verbose:gc
        - -Xloggc:logs/$(POD_NAME)/gc.log
        - -XX:+PrintGCDetails
        - -XX:+PrintGCDateStamps
        - -XX:+PrintGCApplicationStoppedTime
        - -XX:+PrintAdaptiveSizePolicy
        - -XX:+UseGCLogFileRotation
        - -XX:NumberOfGCLogFiles=5
        - -XX:GCLogFileSize=30m
        - -XX:-OmitStackTraceInFastThrow
        - -XX:+AlwaysPreTouch
        - -XX:MaxDirectMemorySize=512m
        - -XX:-UseLargePages
        - -XX:LargePageSizeInBytes=128m
        - -XX:+UseFastAccessorMethods
        - -XX:-UseBiasedLocking
        - -Dfile.encoding=UTF-8
        - -Dspring.profiles.active=k8sprod
        - -XX:+DisableExplicitGC
        - -jar
        - /opt/task-center-web/task-center-web.jar
        command:
        - java
        env:
        - name: POD_IP
          valueFrom:
            fieldRef:
              apiVersion: v1
              fieldPath: status.podIP
        - name: POD_NAME
          valueFrom:
            fieldRef:
              apiVersion: v1
              fieldPath: metadata.name
        - name: SW_AGENT_NAMESPACE
          valueFrom:
            configMapKeyRef:
              name: app-config
              key: APP_NAMESPACE
        - name: SW_AGENT_NAME
          value: "taskcenter-prd-task-center-web"
        - name: SW_LOGGING_DIR
          value: "/opt/skywalking/logs"
        - name: SW_AGENT_COLLECTOR_BACKEND_SERVICES
          value: "ops-public-skywalking:11800"
        image: ops-harbor.xxx.com/taskcenter/task-center-web:dev_20221226_141335
        imagePullPolicy: IfNotPresent
        lifecycle:
          preStop:
            exec:
              command:
              - /bin/bash
              - -c
              - curl -X PUT "http://nacos-0.nacos.base.svc.cluster.local:8848/nacos/v1/ns/instance?serviceName=task-center-web&groupName=FENCE_GROUP_PROD&ip=${POD_IP}&port=18081&namespaceId=f043157a-7d73-4f9c-99c0-d90de4db8163&enabled=false" && sleep 60
        startupProbe:
          httpGet:
            path: /taskcenter/health/check
            port: 18081
            scheme: HTTP
          periodSeconds: 10
          failureThreshold: 50
          successThreshold: 1
          timeoutSeconds: 2
        livenessProbe:
          failureThreshold: 5
          httpGet:
            path: /taskcenter/health/check
            port: 18081
            scheme: HTTP
          initialDelaySeconds: 20
          periodSeconds: 30
          successThreshold: 1
          timeoutSeconds: 3
        name: task-center-web
        ports:
        - containerPort: 18081
          protocol: TCP
        readinessProbe:
          failureThreshold: 5
          httpGet:
            path: /taskcenter/health/check
            port: 18081
            scheme: HTTP
          initialDelaySeconds: 20
          periodSeconds: 30
          successThreshold: 1
          timeoutSeconds: 3
        resources:
          limits:
            cpu: "2.5"
            memory: 7.5Gi
          requests:
            cpu: "1"
            memory: 7.5Gi
        volumeMounts:
        - mountPath: /opt/task-center-web/logs
          name: logs
        - mountPath: /etc/localtime
          name: time
        - mountPath: /opt/skywalking
          name: skywalking
      - env:
        - name: TZ
          valueFrom:
            configMapKeyRef:
              name: app-config
              key: TZ
        - name: NODE_NAME
          valueFrom:
            fieldRef:
              apiVersion: v1
              fieldPath: spec.nodeName
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              apiVersion: v1
              fieldPath: metadata.namespace
        - name: POD_NAME
          valueFrom:
            fieldRef:
              apiVersion: v1
              fieldPath: metadata.name
        - name: POD_IP
          valueFrom:
            fieldRef:
              apiVersion: v1
              fieldPath: status.podIP
        - name: KAFKA_TOPIC_NAME
          valueFrom:
            configMapKeyRef:
              name: app-config
              key: APP_NAMESPACE
        - name: SERVICE_NAME
          value: "task-center-web"
        image: docker.elastic.co/beats/filebeat:8.5.2
        imagePullPolicy: IfNotPresent
        name: filebeat
        resources:
          limits:
            cpu: 100m
            memory: 200Mi
          requests:
            cpu: 10m
            memory: 10Mi
        volumeMounts:
        - mountPath: /usr/share/filebeat/filebeat.yml
          name: filebeat-config
          subPath: filebeat.yml
        - mountPath: /usr/share/filebeat/conf.d
          name: filebeat-config
        - mountPath: /data/logs
          name: logs
      imagePullSecrets:
      - name: docker-pull
      securityContext:
        runAsUser: 0
      volumes:
      - configMap:
          defaultMode: 511
          name: app-config
        name: app-config
      - configMap:
          defaultMode: 420
          items:
          - key: filebeat.yml
            path: filebeat.yml
          - key: app-config.yml
            path: app-config.yml
          name: app-config
        name: filebeat-config
      - hostPath:
          path: /usr/share/zoneinfo/Asia/Calcutta
          type: ""
        name: time
      - emptyDir: {}
        name: logs
      - emptyDir: {}
        name: skywalking