Linux: OpenStack

TAGS: Linux

OpenStack Basics

- Virtualization fundamentals

- The emergence of OpenStack

- OpenStack architecture

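OpenStack Architecture

Components

- Nova: compute
- Glance: images
- Swift: object storage
- Horizon: web dashboard
- Keystone: identity (authentication)
- Neutron: networking
- Cinder: block storage
- Heat: instance orchestration
- Ceilometer: metering (billing)
- Trove: database as a service
- Sahara, Ironic, Zaqar, Manila, Designate, Barbican, ...

Layout

- Controllers: controller1, controller2 (on physical hardware, run the controllers as KVM/VMware/Xen virtual machines)
- HAProxy: ha1, ha2, the access entry point for MySQL, RabbitMQ, Memcached and the controllers
- MySQL: master/slave replication
- NAS: image storage
- Compute nodes: node1, node2, node3, each with an internal NIC and an external NIC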

1. Base environment installation

Official documentation: https://docs.openstack.org/install-guide/environment.html

2. Component installation

Official documentation, minimal deployment: https://docs.openstack.org/install-guide/openstack-services.html

- Identity service – keystone

- Image service – glance

- Placement service – placement

- Compute service – nova

- Networking service – neutron

- Dashboard – horizon

- Block Storage service – cinder

Hands-on lab

Networks:

    172.31.0.0/21    # external network: 172.31.0.0 - 172.31.7.255
    10.10.0.0/21     # internal network: 10.10.0.0 - 10.10.7.255

Hosts (external IP / internal IP):

    # Controllers
    172.31.7.101 / 10.10.7.101    # controller1
    172.31.7.102 / 10.10.7.102    # controller2
    # MySQL
    172.31.7.103                  # mysql-master, RabbitMQ, Memcached
    # HAProxy + Keepalived
    172.31.7.105                  # ha1
    172.31.7.106                  # ha2
    172.31.7.248                  # VIP, openstack-vip.online.local
    # Compute nodes
    172.31.7.107 / 10.10.7.107    # node1
    172.31.7.108 / 10.10.7.108    # node2
    172.31.7.109 / 10.10.7.109    # node3

Every node resolves openstack-vip.online.local to the VIP through /etc/hosts.
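You can check which addresses a /21 covers with plain bash arithmetic. For example, it confirms that 172.31.7.101 and 10.10.7.107 fall inside the two lab networks. This is a minimal sketch: ip2int, int2ip and cidr_range are hypothetical helpers, not part of any OpenStack tool.

```shell
#!/usr/bin/env bash
# ip2int A.B.C.D: dotted quad -> 32-bit integer
ip2int() {
  local IFS=.
  local a b c d
  read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# int2ip N: 32-bit integer -> dotted quad
int2ip() {
  local n=$1
  echo "$(( (n >> 24) & 255 )).$(( (n >> 16) & 255 )).$(( (n >> 8) & 255 )).$(( n & 255 ))"
}

# cidr_range NET/PREFIX: print the first and last address of the block
cidr_range() {
  local net=${1%/*} prefix=${1#*/} mask first last
  mask=$(( (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
  first=$(( $(ip2int "$net") & mask ))
  last=$(( first | (~mask & 0xFFFFFFFF) ))
  echo "$(int2ip "$first") $(int2ip "$last")"
}

cidr_range 172.31.0.0/21   # 172.31.0.0 172.31.7.255
cidr_range 10.10.0.0/21    # 10.10.0.0 10.10.7.255
```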

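Building the OpenStack Lab Environment

Contents

- Base environment installation
  - Choosing the operating system
  - Choosing the network architecture
  - NTP time sync and basic OS configuration
  - OpenStack base packages
  - Database and message queue
- Minimal component installation
  - Overview of the OpenStack core services
  - Installing the OpenStack core services
    - Identity service: Keystone
    - Placement service: Placement
    - Image service: Glance
    - Networking service: Neutron
    - Block Storage service: Cinder
    - Compute service: Nova
    - Dashboard service: Horizon
- OpenStack compute node configuration
  - Basic service types on a compute node
  - Compute node networking
  - Installing and configuring a compute node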

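Base Environment Installation

Operating System

NICs: eth0 (external, 172.31.0.0/21) and eth1 (internal, 10.10.0.0/21)

    # /etc/sysconfig/network-scripts/ifcfg-eth0
    # IPADDR is 172.31.7.101 on controller1, .102 on controller2, ...
    TYPE=Ethernet
    BOOTPROTO=static
    NAME=eth0
    ONBOOT=yes
    IPADDR=172.31.7.101
    PREFIX=21
    GATEWAY=172.31.7.254
    DNS1=223.6.6.6

    # /etc/sysconfig/network-scripts/ifcfg-eth1
    # IPADDR is 10.10.7.101 on controller1, .102 on controller2, ...
    TYPE=Ethernet
    BOOTPROTO=static
    NAME=eth1
    ONBOOT=yes
    IPADDR=10.10.7.101
    PREFIX=21
    GATEWAY=10.10.7.254
    DNS1=223.6.6.6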
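The same two files repeat on every host, and only IPADDR changes. Generating them avoids hand-typing mistakes in keys such as PREFIX and DNS1. This sketch assumes the network-scripts ifcfg format shown above; gen_ifcfg is a hypothetical helper.

```shell
#!/usr/bin/env bash
# gen_ifcfg DEVICE IPADDR PREFIX GATEWAY DNS1
# Prints an ifcfg file with the keys used above (hypothetical helper).
gen_ifcfg() {
  local dev=$1 ip=$2 prefix=$3 gw=$4 dns=$5
  cat <<EOF
TYPE=Ethernet
BOOTPROTO=static
NAME=$dev
ONBOOT=yes
IPADDR=$ip
PREFIX=$prefix
GATEWAY=$gw
DNS1=$dns
EOF
}

# For controller2, redirect into the real files once the output looks right:
#   gen_ifcfg eth0 172.31.7.102 21 172.31.7.254 223.6.6.6 > /etc/sysconfig/network-scripts/ifcfg-eth0
#   gen_ifcfg eth1 10.10.7.102 21 10.10.7.254 223.6.6.6 > /etc/sysconfig/network-scripts/ifcfg-eth1
gen_ifcfg eth1 10.10.7.102 21 10.10.7.254 223.6.6.6
```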

    # Disable the firewall
    # Disable SELinux
    # Install common tools
    # Set the hostname
    # Sync the time
    ntpdate time1.aliyun.com; hwclock -w
    # Set the time zone
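The checklist above leaves most commands implicit. The sketch below spells out one common way to do each step on a systemd-based CentOS host. The Asia/Shanghai time zone is an assumption, chosen because the time source is Alibaba Cloud. run and prep_host are hypothetical helpers; with DRY_RUN=1 they only print the commands.

```shell
#!/usr/bin/env bash
# run: execute a command, or only print it when DRY_RUN is set
run() { if [ -n "${DRY_RUN:-}" ]; then echo "$*"; else "$@"; fi; }

# prep_host HOSTNAME: the base OS steps from the checklist above
prep_host() {
  local name=$1
  run systemctl disable --now firewalld                               # disable the firewall
  run setenforce 0                                                    # SELinux permissive right away
  run sed -i 's/^SELINUX=.*/SELINUX=disabled/' /etc/selinux/config    # SELinux disabled after reboot
  run hostnamectl set-hostname "$name"                                # hostname
  run ntpdate time1.aliyun.com                                        # one-off time sync
  run hwclock -w                                                      # write system time to the RTC
  run timedatectl set-timezone Asia/Shanghai                          # time zone (assumed)
}

DRY_RUN=1 prep_host controller1
```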

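Installing the OpenStack packages

Set up a self-hosted package mirror in advance, so that installations do not fail later when packages are no longer available upstream.

Install on both the compute nodes and the controller nodes:

    # Using the stock repositories: the extras repository provides the RPM
    # that enables the OpenStack repository, so just install it
    yum list centos-release-openstack*
    yum install centos-release-openstack-<release>    # on the controller, node and mysql hosts
    # Or use RDO
    dnf install https://www.rdoproject.org/repos/rdo-release.el9.rpm    # on the controller, node and mysql hosts
    dnf install python3-openstackclient openstack-selinux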

SQL database

MySQL host (physical machine): 172.31.7.103

    yum install mariadb mariadb-server python3-PyMySQL    # the controllers also need python3-PyMySQL

/etc/my.cnf.d/openstack.cnf

    [mysqld]
    bind-address = 0.0.0.0
    default-storage-engine = innodb
    innodb_file_per_table = on
    max_connections = 4096
    collation-server = utf8_general_ci
    character-set-server = utf8

Start it:

    systemctl enable mariadb.service
    systemctl start mariadb.service

Message queue

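RabbitMQ host (physical machine): 172.31.7.103

Add the host's fully qualified name to /etc/hosts. RabbitMQ names its node after the host, so the name must resolve.

    yum install rabbitmq-server
    systemctl enable rabbitmq-server.service
    systemctl start rabbitmq-server.service

Values to substitute

    RABBIT_PASS: openstack123

Create the RabbitMQ account

    rabbitmqctl add_user openstack openstack123
    rabbitmqctl set_permissions openstack ".*" ".*" ".*"

Management UI

    rabbitmq-plugins list
    rabbitmq-plugins enable rabbitmq_management

Access: http://172.31.7.103:15672 (guest/guest). By default RabbitMQ only lets the guest account log in from localhost. For remote access, use an account tagged administrator (for example: rabbitmqctl set_user_tags openstack administrator).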
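Every service later refers to this RabbitMQ account through an oslo.messaging transport_url. The small helper below makes the URL format explicit. Assumptions:

- the default port 5672 and the default virtual host "/";
- the password is not URL-encoded, so avoid characters such as @, : or / in RABBIT_PASS.

transport_url here is a hypothetical shell function, not an OpenStack command.

```shell
#!/usr/bin/env bash
# transport_url USER PASS HOST [PORT]
# Prints rabbit://USER:PASS@HOST:PORT/ (the password is NOT URL-encoded).
transport_url() {
  local user=$1 pass=$2 host=$3 port=${4:-5672}
  printf 'rabbit://%s:%s@%s:%s/\n' "$user" "$pass" "$host" "$port"
}

transport_url openstack openstack123 openstack-vip.online.local
# rabbit://openstack:openstack123@openstack-vip.online.local:5672/
```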

Memcached

Memcached host (physical machine): 172.31.7.103

    yum install memcached python3-memcached    # the controllers need python3-memcached as well

/etc/sysconfig/memcached

    PORT="11211"
    USER="memcached"
    MAXCONN="1024"
    CACHESIZE="1024"
    OPTIONS="-l 0.0.0.0,::1"

    systemctl enable memcached.service
    systemctl start memcached.service

HAProxy + Keepalived

HA hosts (physical machines): 172.31.7.105 and 172.31.7.106

    yum install -y haproxy keepalived

    # keepalived
    vim /etc/keepalived/keepalived.conf

    global_defs {
        notification_email {
            acassen@firewall.loc
            failover@firewall.loc
            sysadmin@firewall.loc
        }
        notification_email_from Alexandre.Cassen@firewall.loc
        smtp_server 192.168.200.1
        smtp_connect_timeout 30
        router_id LVS_DEVEL
        vrrp_skip_check_adv_addr
        vrrp_strict      # strict mode: automatically adds firewall rules that make the VIP unreachable
        vrrp_iptables    # with vrrp_strict also set, no firewall rules are added; without it, they are
        vrrp_garp_interval 0
        vrrp_gna_interval 0
    }
    vrrp_instance VI_1 {
        state MASTER              # BACKUP on the other node
        interface eth0
        virtual_router_id 58      # change this line; same value on both nodes, unique among VRRP groups on the LAN
        priority 100              # 80 on the other node
        advert_int 1
        authentication {
            auth_type PASS
            auth_pass 1111
        }
        virtual_ipaddress {
            172.31.7.248 dev eth0 label eth0:0
        }
    }

    # haproxy
    vim /etc/haproxy/haproxy.cfg
    ...
    listen openstack-mysql-3306
        bind 172.31.7.248:3306
        mode tcp
        server 172.31.7.103 172.31.7.103:3306 check inter 3s fall 3 rise 5
    listen openstack-rabbitmq-5672
        bind 172.31.7.248:5672
        mode tcp
        server 172.31.7.103 172.31.7.103:5672 check inter 3s fall 3 rise 5
    listen openstack-memcached-11211
        bind 172.31.7.248:11211
        mode tcp
        server 172.31.7.103 172.31.7.103:11211 check inter 3s fall 3 rise 5

    systemctl enable haproxy keepalived
    systemctl restart haproxy keepalived

On every node, add a hosts entry that resolves the VIP name:

echo "172.31.7.248 openstack-vip.online.local" >> /etc/hosts
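Running the echo above twice leaves two identical lines in /etc/hosts. If this step is scripted across nodes, an idempotent variant helps. add_host_entry is a hypothetical helper: it only appends when the name is not yet mapped on an uncommented line. It does not correct a wrong IP for a name that already exists.

```shell
#!/usr/bin/env bash
# add_host_entry IP NAME [FILE]: add "IP NAME" to FILE (default /etc/hosts)
# unless NAME already appears as a hostname field on an uncommented line.
add_host_entry() {
  local ip=$1 name=$2 file=${3:-/etc/hosts}
  if [ -f "$file" ] && awk -v n="$name" '
       $1 !~ /^#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
       END { exit !found }' "$file"; then
    return 0    # already present, nothing to do
  fi
  printf '%s %s\n' "$ip" "$name" >> "$file"
}

# add_host_entry 172.31.7.248 openstack-vip.online.local
```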

Minimal Component Installation

- Identity service – keystone

- Image service – glance

- Placement service – placement

- Compute service – nova

- Networking service – neutron

- Dashboard – horizon

- Block Storage service – cinder

Values to substitute

    DEMO_PASS
    METADATA_SECRET
    RABBIT_PASS: openstack123
    controller: openstack-vip.online.local
    KEYSTONE_DBPASS: keystone123
    GLANCE_DBPASS: glance123
    GLANCE_PASS: glance
    PLACEMENT_PASS: placement123
    NOVA_DBPASS: nova123
    NOVA_PASS: nova
    NEUTRON_DBPASS: neutron123
    NEUTRON_PASS: neutron
    DASH_DBPASS: dash123
    ADMIN_PASS: admin
    CINDER_DBPASS: cinder123
    CINDER_PASS: cinder
    MANAGEMENT_INTERFACE_IP_ADDRESS: the local host's IP
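Most of the passwords above belong to service databases, and each service needs its own database and grants. The sketch below prints the SQL for all of them so it can be reviewed before it is fed to the MySQL host.

- The database names follow the official install guide; Nova uses nova_api, nova and nova_cell0.
- The placement database password is an assumption, because the list above only gives PLACEMENT_PASS.
- gen_db_sql and all_db_sql are hypothetical helpers.

```shell
#!/usr/bin/env bash
# gen_db_sql DB USER PASS: CREATE DATABASE and GRANT statements (MariaDB syntax)
gen_db_sql() {
  local db=$1 user=$2 pass=$3
  cat <<EOF
CREATE DATABASE IF NOT EXISTS ${db};
GRANT ALL PRIVILEGES ON ${db}.* TO '${user}'@'localhost' IDENTIFIED BY '${pass}';
GRANT ALL PRIVILEGES ON ${db}.* TO '${user}'@'%' IDENTIFIED BY '${pass}';
EOF
}

# all_db_sql: SQL for every service in this lab
all_db_sql() {
  local db
  gen_db_sql keystone keystone keystone123
  gen_db_sql glance glance glance123
  gen_db_sql placement placement placement123    # assumed DB password
  for db in nova_api nova nova_cell0; do
    gen_db_sql "$db" nova nova123
  done
  gen_db_sql neutron neutron neutron123
  gen_db_sql cinder cinder cinder123
}

# Review the output, then on 172.31.7.103:  all_db_sql | mysql -u root -p
all_db_sql
```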

admin-openrc

    cat <<\EOF > admin-openrc
    export OS_PROJECT_DOMAIN_NAME=Default
    export OS_USER_DOMAIN_NAME=Default
    export OS_PROJECT_NAME=admin
    export OS_USERNAME=admin
    export OS_PASSWORD=admin
    export OS_AUTH_URL=http://openstack-vip.online.local:5000/v3
    export OS_IDENTITY_API_VERSION=3
    export OS_IMAGE_API_VERSION=2
    EOF
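A missing or misspelled variable in an openrc file usually surfaces only as a vague authentication error. The check below sources the file in a subshell and reports what it does not export. The required list reflects what Keystone v3 password authentication usually needs; it is an assumption, not an exhaustive list from the client documentation. check_openrc is a hypothetical helper.

```shell
#!/usr/bin/env bash
# check_openrc FILE: succeed only if FILE exports every variable listed below.
check_openrc() {
  local file=$1 missing=0 var
  for var in OS_AUTH_URL OS_USERNAME OS_PASSWORD OS_PROJECT_NAME \
             OS_USER_DOMAIN_NAME OS_PROJECT_DOMAIN_NAME OS_IDENTITY_API_VERSION; do
    # Subshell: clear the variable, source the file, print what it exported.
    if [ -z "$(unset "$var"; . "$file" >/dev/null 2>&1; printenv "$var")" ]; then
      echo "missing: $var" >&2
      missing=1
    fi
  done
  return "$missing"
}

# Usage: check_openrc ./admin-openrc && source ./admin-openrc
```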

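Networks

- admin: management network

- internal: internal network

- public: public network

Open vSwitch agent bridge mappings (physical network : bridge):

    #bridge_mappings = provider:PROVIDER_BRIDGE_NAME
    bridge_mappings = external:eth0,internal:eth1

Note: with the Open vSwitch agent, the name after each colon must be an OVS bridge, not a NIC. Mapping a physical network straight to a NIC is the syntax of the Linux bridge agent's physical_interface_mappings. With OVS, create one bridge per NIC (ovs-vsctl add-br, then add-port) and map each physical network to its bridge.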
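With the Open vSwitch agent, each physical network needs an OVS bridge that contains the NIC, and bridge_mappings then points at that bridge. The sketch below runs the ovs-vsctl commands, or prints them when DRY_RUN=1, and prints the matching bridge_mappings value.

- The br-NIC naming scheme is an assumption.
- setup_ovs_bridges and mappings_for are hypothetical helpers.

Be careful with a NIC that carries the host's own IP. Adding it to a bridge cuts that IP off until the IP is moved onto the bridge, so do this from a console rather than over that NIC.

```shell
#!/usr/bin/env bash
run() { if [ -n "${DRY_RUN:-}" ]; then echo "$*"; else "$@"; fi; }

# setup_ovs_bridges PHYSNET:NIC ...: create br-NIC and add NIC to it (idempotent)
setup_ovs_bridges() {
  local pair nic
  for pair in "$@"; do
    nic=${pair#*:}
    run ovs-vsctl --may-exist add-br "br-$nic"
    run ovs-vsctl --may-exist add-port "br-$nic" "$nic"
  done
}

# mappings_for PHYSNET:NIC ...: print the bridge_mappings line for those bridges
mappings_for() {
  local pair out=""
  for pair in "$@"; do
    out+="${out:+,}${pair%%:*}:br-${pair#*:}"
  done
  echo "bridge_mappings = $out"
}

DRY_RUN=1 setup_ovs_bridges external:eth0 internal:eth1
mappings_for external:eth0 internal:eth1
# bridge_mappings = external:br-eth0,internal:br-eth1
```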

Adding an OpenStack controller node

    # Repositories
    # https://docs.openstack.org/install-guide/environment-packages.html
    ### CentOS Stream 9
    dnf install centos-release-openstack-<release>
    dnf install python3-openstackclient openstack-selinux

    # Client library packages
    # https://docs.openstack.org/install-guide/environment-sql-database.html
    # https://docs.openstack.org/install-guide/environment-memcached.html
    yum install -y python3-PyMySQL python3-memcached

    # Resolve the VIP name
    echo "172.31.7.248 openstack-vip.online.local" >> /etc/hosts

    # Keystone
    # https://docs.openstack.org/keystone/2024.2/install/
    dnf install openstack-keystone httpd python3-mod_wsgi
    ## On an already-installed controller, pack the Keystone config and push it to the new node
    cd /etc/keystone ; tar czvf keystone-controller.tar.gz ./*
    scp keystone-controller.tar.gz 172.31.7.102:/etc/keystone/
    ## Unpack on the new node
    cd /etc/keystone; tar xf keystone-controller.tar.gz -C /etc/keystone/
    ## Apache HTTP server: in /etc/httpd/conf/httpd.conf set
    ServerName 172.31.7.102:80
    ln -s /usr/share/keystone/wsgi-keystone.conf /etc/httpd/conf.d/
    systemctl enable --now httpd.service
    ## Verify: add the new Keystone backend to the HAProxy config, then run
    openstack user list

    # Glance
    # https://docs.openstack.org/glance/2024.2/install/
    yum install openstack-glance
    mkdir -p /var/lib/glance/images
    chown glance:glance /var/lib/glance/images -R
    echo '172.31.7.105:/data/glance /var/lib/glance/images nfs defaults,_netdev 0 0' >> /etc/fstab
    mount -a
    ## On an already-installed controller, pack the Glance config and push it to the new node
    cd /etc/glance ; tar czvf glance-controller.tar.gz ./*
    scp glance-controller.tar.gz 172.31.7.102:/etc/glance/
    ## Unpack on the new node
    cd /etc/glance; tar xf glance-controller.tar.gz -C /etc/glance/
    systemctl enable --now openstack-glance-api.service
    tail -f /var/log/glance/api.log
    ## Add the Glance backend to the HAProxy config

    # Placement
    # https://docs.openstack.org/placement/2024.2/install/
    yum install openstack-placement-api
    ## On an already-installed controller, pack the Placement config and push it to the new node
    cd /etc/placement ; tar czvf placement-controller.tar.gz ./*
    scp placement-controller.tar.gz 172.31.7.102:/etc/placement/
    ## Unpack on the new node
    cd /etc/placement; tar xf placement-controller.tar.gz -C /etc/placement/
    systemctl restart httpd
    tail -f /var/log/placement/placement-api.log
    ## Add the Placement backend to the HAProxy config
    placement-status upgrade check

    # Nova
    # https://docs.openstack.org/nova/2024.2/install/controller-install.html
    yum -y install openstack-nova-api openstack-nova-conductor \
      openstack-nova-novncproxy openstack-nova-scheduler
    ## On an already-installed controller, pack the Nova config and push it to the new node
    cd /etc/nova ; tar czvf nova-controller.tar.gz ./*
    scp nova-controller.tar.gz 172.31.7.102:/etc/nova/
    ## Unpack on the new node
    cd /etc/nova; tar xf nova-controller.tar.gz -C /etc/nova/
    ## vim nova.conf: set server_listen and server_proxyclient_address to this host's IP
    ## Copy the environment files from the existing controller
    scp admin-openrc demo-openrc 172.31.7.102:/root/
    systemctl enable --now \
      openstack-nova-api.service \
      openstack-nova-scheduler.service \
      openstack-nova-conductor.service \
      openstack-nova-novncproxy.service
    tail -f /var/log/nova/*.log
    ## Add the Nova backends to the HAProxy config
    source admin-openrc
    nova service-list

    # Neutron
    # https://docs.openstack.org/neutron/2024.2/install/
    # https://docs.openstack.org/neutron/2024.2/install/controller-install-option1-rdo.html
    yum install openstack-neutron openstack-neutron-ml2 \
      openstack-neutron-openvswitch
    ## On an already-installed controller, pack the Neutron config and push it to the new node
    cd /etc/neutron ; tar czvf neutron-controller.tar.gz ./*
    scp neutron-controller.tar.gz 172.31.7.102:/etc/neutron/
    ## Unpack on the new node
    cd /etc/neutron; tar xf neutron-controller.tar.gz -C /etc/neutron/
    systemctl enable neutron-server.service \
      neutron-openvswitch-agent.service neutron-dhcp-agent.service \
      neutron-metadata-agent.service
    systemctl start neutron-server.service \
      neutron-openvswitch-agent.service neutron-dhcp-agent.service \
      neutron-metadata-agent.service
    tail -f /var/log/neutron/*.log
    ## Add the Neutron backend to the HAProxy config
    neutron agent-list

    # Dashboard
    # https://docs.openstack.org/horizon/2024.2/install/
    yum install -y openstack-dashboard
    ## On an already-installed controller, pack the dashboard config and push it to the new node
    cd /etc/openstack-dashboard/ ; tar czvf openstack-dashboard-controller.tar.gz ./*
    scp openstack-dashboard-controller.tar.gz 172.31.7.102:/etc/openstack-dashboard/
    ## Unpack on the new node
    cd /etc/openstack-dashboard; tar xf openstack-dashboard-controller.tar.gz -C /etc/openstack-dashboard/
    ## vim local_settings: set ALLOWED_HOSTS and OPENSTACK_HOST to this host's IP
    systemctl restart httpd
    tail -f /var/log/httpd/*.log
    ## Add the dashboard backend to the HAProxy config
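The pack, copy and unpack steps above repeat for every service. The two helpers below keep the archive outside the directory being archived, so a rerun does not pack the previous tarball into the new one, and they unpack into the target directory. Assumptions:

- pack_conf and unpack_conf are hypothetical names;
- the SSH one-liner in the comments assumes root SSH access from the existing controller to 172.31.7.102.

```shell
#!/usr/bin/env bash
# pack_conf DIR ARCHIVE: archive the contents of DIR; ARCHIVE should live outside DIR
pack_conf() {
  local dir=$1 archive=$2
  tar -C "$dir" -czf "$archive" .
}

# unpack_conf ARCHIVE DIR: extract into DIR, creating it if needed.
# Run as root so owners and groups (keystone, glance, ...) are restored by name.
unpack_conf() {
  local archive=$1 dir=$2
  mkdir -p "$dir"
  tar -C "$dir" -xzf "$archive"
}

# Typical use on the existing controller:
#   for svc in keystone glance placement nova neutron openstack-dashboard; do
#     pack_conf "/etc/$svc" "/tmp/$svc-controller.tar.gz"
#   done
# Or stream one directory straight to the new node:
#   tar -C /etc/keystone -czf - . | ssh root@172.31.7.102 'tar -C /etc/keystone -xzf -'
```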

HAProxy configuration

    ...
    listen openstack-mysql-3306
        bind 172.31.7.248:3306
        mode tcp
        server 172.31.7.103 172.31.7.103:3306 check inter 3s fall 3 rise 5
    listen openstack-rabbitmq-5672
        bind 172.31.7.248:5672
        mode tcp
        server 172.31.7.103 172.31.7.103:5672 check inter 3s fall 3 rise 5
    listen openstack-memcached-11211
        bind 172.31.7.248:11211
        mode tcp
        server 172.31.7.103 172.31.7.103:11211 check inter 3s fall 3 rise 5
    listen openstack-keystone-5000
        bind 172.31.7.248:5000
        mode tcp
        server 172.31.7.101 172.31.7.101:5000 check inter 3s fall 3 rise 5
        server 172.31.7.102 172.31.7.102:5000 check inter 3s fall 3 rise 5
    listen openstack-glance-9292
        bind 172.31.7.248:9292
        mode tcp
        server 172.31.7.101 172.31.7.101:9292 check inter 3s fall 3 rise 5
        server 172.31.7.102 172.31.7.102:9292 check inter 3s fall 3 rise 5
    listen openstack-placement-8778
        bind 172.31.7.248:8778
        mode tcp
        server 172.31.7.101 172.31.7.101:8778 check inter 3s fall 3 rise 5
        server 172.31.7.102 172.31.7.102:8778 check inter 3s fall 3 rise 5
    listen openstack-nova-controller-8774
        bind 172.31.7.248:8774
        mode tcp
        server 172.31.7.101 172.31.7.101:8774 check inter 3s fall 3 rise 5
        server 172.31.7.102 172.31.7.102:8774 check inter 3s fall 3 rise 5
    listen openstack-nova-controller-8775
        bind 172.31.7.248:8775
        mode tcp
        server 172.31.7.101 172.31.7.101:8775 check inter 3s fall 3 rise 5
        server 172.31.7.102 172.31.7.102:8775 check inter 3s fall 3 rise 5
    listen openstack-nova-novncproxy-6080
        bind 172.31.7.248:6080
        mode tcp
        server 172.31.7.101 172.31.7.101:6080 check inter 3s fall 3 rise 5
        server 172.31.7.102 172.31.7.102:6080 check inter 3s fall 3 rise 5
    listen openstack-neutron-9696
        bind 172.31.7.248:9696
        mode tcp
        server 172.31.7.101 172.31.7.101:9696 check inter 3s fall 3 rise 5
        server 172.31.7.102 172.31.7.102:9696 check inter 3s fall 3 rise 5
    listen openstack-dashboard-80
        bind 172.31.7.248:80
        mode tcp
        server 172.31.7.101 172.31.7.101:80 check inter 3s fall 3 rise 5
        server 172.31.7.102 172.31.7.102:80 check inter 3s fall 3 rise 5
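The controller-facing sections above differ only in name and port. Generating them means adding a third controller or a new service (such as Cinder on 8776) is a one-word change. This sketch reproduces the TCP mode and health-check settings used above. gen_listen and gen_controller_listens are hypothetical helpers. Validate the result with haproxy -c before reloading.

```shell
#!/usr/bin/env bash
# gen_listen NAME VIP PORT BACKEND...: one HAProxy "listen" section in TCP mode
gen_listen() {
  local name=$1 vip=$2 port=$3 be
  shift 3
  printf 'listen %s-%s\n' "$name" "$port"
  printf '    bind %s:%s\n' "$vip" "$port"
  printf '    mode tcp\n'
  for be in "$@"; do
    printf '    server %s %s:%s check inter 3s fall 3 rise 5\n' "$be" "$be" "$port"
  done
}

# gen_controller_listens: the controller-facing sections, backed by both controllers
gen_controller_listens() {
  local entry
  for entry in keystone:5000 glance:9292 placement:8778 nova-controller:8774 \
               nova-controller:8775 nova-novncproxy:6080 neutron:9696 dashboard:80; do
    gen_listen "openstack-${entry%%:*}" 172.31.7.248 "${entry##*:}" 172.31.7.101 172.31.7.102
  done
}

# Review, append, validate and reload (on ha1 and ha2):
#   gen_controller_listens >> /etc/haproxy/haproxy.cfg
#   haproxy -c -f /etc/haproxy/haproxy.cfg && systemctl reload haproxy
gen_controller_listens
```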

OpenStack Compute Node Configuration

Quickly adding an OpenStack compute node

    # Time sync
    /usr/sbin/ntpdate time1.aliyun.com && hwclock -w
    # Repositories
    # https://docs.openstack.org/install-guide/environment-packages-rdo.html
    dnf install centos-release-openstack-<release>
    dnf install python3-openstackclient openstack-selinux

    # Nova
    # https://docs.openstack.org/nova/2024.2/install/compute-install.html
    yum install openstack-nova-compute -y
    # On an already-installed compute node, pack the Nova config and push it to the new node
    cd /etc/nova ; tar czvf nova-compute.tar.gz ./*
    scp /etc/nova/nova-compute.tar.gz 172.31.7.108:/etc/nova/
    # Unpack on the new node
    cd /etc/nova; tar xf nova-compute.tar.gz -C /etc/nova
    # In nova.conf set server_proxyclient_address = <this node's IP>
    # Resolve the VIP name
    echo "172.31.7.248 openstack-vip.online.local" >> /etc/hosts
    # Restart helper script
    cat <<\EOF > nova-restart.sh
    #!/bin/bash
    systemctl restart openstack-nova-compute.service
    EOF
    systemctl start libvirtd openstack-nova-compute
    systemctl enable libvirtd openstack-nova-compute

    # Neutron
    # https://docs.openstack.org/neutron/2024.2/install/compute-install-rdo.html
    yum install openstack-neutron-openvswitch -y
    # On an already-installed compute node, pack the Neutron config and push it to the new node
    cd /etc/neutron ; tar czvf neutron-compute.tar.gz ./*
    scp neutron-compute.tar.gz 172.31.7.108:/etc/neutron/
    # Unpack on the new node
    cd /etc/neutron; tar xf neutron-compute.tar.gz -C /etc/neutron
    # Kernel parameters: append (">" would overwrite /etc/sysctl.conf);
    # the bridge-nf-call keys exist only once the br_netfilter module is loaded
    modprobe br_netfilter
    echo br_netfilter > /etc/modules-load.d/br_netfilter.conf
    cat <<\EOF >> /etc/sysctl.conf
    net.bridge.bridge-nf-call-iptables=1
    net.bridge.bridge-nf-call-ip6tables=1
    EOF
    # Restart helper script
    cat <<\EOF > neutron-restart.sh
    systemctl restart neutron-openvswitch-agent.service
    EOF
    systemctl enable neutron-openvswitch-agent.service
    systemctl start neutron-openvswitch-agent.service
    # Reboot the node
    reboot

Notes:

1. If this is scripted, the script's working directory must contain the nova and neutron config archives.
2. Also carry over the kernel parameters and resource limits: sysctl.conf and limits.conf.
3. Verify on a controller: neutron agent-list and nova service-list.
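Appending with >> is safe only the first time: rerunning the step duplicates the entries, and a single > wipes the rest of /etc/sysctl.conf. This sketch updates an uncommented key in place, or appends it once if it is missing. set_sysctl is a hypothetical helper, and its file argument defaults to /etc/sysctl.conf. Apply the result with sysctl -p afterwards.

```shell
#!/usr/bin/env bash
# set_sysctl KEY VALUE [FILE]: write "KEY = VALUE" into FILE, replacing an
# existing uncommented entry for KEY (duplicates are dropped), else appending it.
set_sysctl() {
  local key=$1 value=$2 file=${3:-/etc/sysctl.conf} tmp
  touch "$file"
  tmp=$(mktemp)
  awk -v k="$key" -v v="$value" '
    { line = $0; sub(/^[ \t]+/, "", line) }
    index(line, k) == 1 && substr(line, length(k) + 1) ~ /^[ \t]*=/ {
      if (!done) print k " = " v
      done = 1
      next
    }
    { print }
    END { if (!done) print k " = " v }
  ' "$file" > "$tmp" && cat "$tmp" > "$file"
  rm -f "$tmp"
}

# On a compute node:
#   modprobe br_netfilter
#   set_sysctl net.bridge.bridge-nf-call-iptables 1
#   set_sysctl net.bridge.bridge-nf-call-ip6tables 1
#   sysctl -p
```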

OpenStack Image Service (Glance) in Depth

Contents

- Glance service architecture

- Glance image management

- Building OpenStack VM images

Building OpenStack VM Images

Official image guide: https://docs.openstack.org/image-guide/

Install a minimal OS in a VM on a KVM host, then configure and tune it. When you are done, shut the VM down and upload its disk file to Glance; instances can then be booted from it.

CentOS 7+ and Ubuntu

    # Install the base virtualization tools
    yum install -y qemu-kvm qemu-kvm-tools libvirt virt-manager virt-install
    # Create the disks
    qemu-img create -f qcow2 /var/lib/libvirt/images/CentOS-7-x86_64.qcow2 10G
    qemu-img create -f qcow2 /var/lib/libvirt/images/ubuntu-2410.qcow2 10G
    # Install
    virt-install --virt-type kvm --name CentOS-x86_64 --ram 1024 --cdrom=/opt/CentOS-7-x86_64-Minimal-1511.iso \
      --disk path=/var/lib/libvirt/images/CentOS-7-x86_64.qcow2 --network bridge=br0 --graphics vnc,listen=0.0.0.0 \
      --noautoconsole
    virt-install --virt-type kvm --name ubuntu-2410 --ram 1024 --cdrom=/opt/ubuntu-24.10-live-server-amd64.iso \
      --disk path=/var/lib/libvirt/images/ubuntu-2410.qcow2 --network bridge=br0 --graphics vnc,listen=0.0.0.0 \
      --noautoconsole
    # Connect with a VNC client and install the OS
    # vnc viewer IP:5900
    # Initialize the system and install the SSH key
    # Shut the VM down, then copy the image to a controller
    cd /var/lib/libvirt/images
    scp -P22 CentOS-7-x86_64.qcow2 172.31.7.101:/root/
    # On the controller, upload the image to Glance
    source admin-openrc
    openstack image create "CentOS-7-x86_64-template" --file /root/CentOS-7-x86_64.qcow2 --disk-format qcow2 \
      --container-format bare --public
    # Verify
    openstack image list
    # Then create an instance from the image

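Initialization inside the CentOS VM

    # In addition to the basic initialization:
    # install the public key
    # acpid lets the host reboot or shut down the instance through ACPI events
    yum install acpid
    systemctl enable acpid
    # cloud-init reads the OpenStack metadata API; it can apply metadata and grow the disk
    yum install cloud-init
    vim /etc/cloud/cloud.cfg
    users:
      - name: root
    disable_root: false
    # cloud-init slows booting down; it can be started after OpenStack has launched the instance,
    # but without it the disk is not grown
    systemctl disable cloud-init
    yum install cloud-utils-growpart

Example: importing an SSH public key into OpenStack

    # https://docs.openstack.org/install-guide/launch-instance.html#generate-a-key-pair
    # Create an SSH key pair and register its public key
    . demo-openrc
    ssh-keygen -q -N ""
    openstack keypair create --public-key ~/.ssh/id_rsa.pub mykey
    openstack keypair list
    # List the network IDs
    openstack network list
    # List the available security groups
    openstack security group list
    # Create an instance from the command line with the SSH public key injected
    openstack server create --flavor m1.nano --image cirros \
      --nic net-id=PROVIDER_NET_ID --security-group default \
      --key-name mykey vm1111
    # Log in to vm1111 and check that the public key is present

Example: resetting the root password in an official cloud image

    # Official cloud images only allow SSH key login, so the root password is unknown
    yum install libguestfs-tools -y
    # Modify the disk image in place and set the root password
    virt-customize -a /var/lib/libvirt/images/CentOS-7-x86_64-GenericCloud-1511.qcow2 --root-password password:123456

Example: growing the root disk on CentOS 6.9

    # CentOS 6 does not grow its root disk automatically in OpenStack
    # https://github.com/flegmatik/linux-rootfs-resize
    yum install epel-release -y
    yum install git parted cloud-utils -y
    git clone https://github.com/flegmatik/linux-rootfs-resize.git
    cd linux-rootfs-resize
    ./install
    # NIC naming issue
    vim /etc/udev/rules.d/70-persistent-net.rules
    # keep only the newest NIC entry and rename its eth1 to eth0; reboot to apply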

OpenStack Networking Service (Neutron) in Depth

Contents

- Neutron component architecture and model

- Neutron plugins and agents

- Basic network types and their management

Implementing an internal/external network layout

Controller node configuration

    vim /etc/neutron/plugins/ml2/ml2_conf.ini
    [ml2_type_flat]
    # ...
    flat_networks = external,internal

    vim /etc/neutron/plugins/ml2/openvswitch_agent.ini
    [ovs]
    bridge_mappings = external:eth0,internal:eth1

    # Restart neutron (neutron-server reads ml2_conf.ini, so restart it as well)
    cat <<\EOF > neutron-restart.sh
    systemctl restart neutron-server.service neutron-openvswitch-agent.service
    EOF
    bash neutron-restart.sh

Compute node configuration

    vim /etc/neutron/plugins/ml2/openvswitch_agent.ini
    [ovs]
    bridge_mappings = external:eth0,internal:eth1

    # Restart neutron
    cat <<\EOF > neutron-restart.sh
    systemctl restart neutron-openvswitch-agent.service
    EOF
    bash neutron-restart.sh

Create the network and verify

    # On the controller, create the network
    source admin-openrc
    neutron net-create --shared --provider:physical_network internal --provider:network_type flat internal-net
    openstack network list
    # Create the subnet
    neutron subnet-create --name internal-sub --allocation-pool start=10.10.0.50,end=10.10.0.100 --dns-nameserver 223.6.6.6 internal-net 10.10.0.0/21
    # Verify
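Before running subnet-create, it is worth confirming that the allocation pool really sits inside the subnet and how many addresses it offers. For example, 10.10.0.50 to 10.10.0.100 is 51 addresses inside 10.10.0.0/21. This is a bash sketch: check_pool and ip2int are hypothetical helpers, and they do not query Neutron.

```shell
#!/usr/bin/env bash
ip2int() {
  local IFS=.
  local a b c d
  read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# check_pool CIDR START END: fail if START..END is not inside CIDR, else print its size
check_pool() {
  local net=${1%/*} prefix=${1#*/} s e base mask
  mask=$(( (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
  base=$(( $(ip2int "$net") & mask ))
  s=$(ip2int "$2")
  e=$(ip2int "$3")
  if (( (s & mask) != base || (e & mask) != base || s > e )); then
    echo "pool $2-$3 is not inside $1" >&2
    return 1
  fi
  echo $(( e - s + 1 ))
}

check_pool 10.10.0.0/21 10.10.0.50 10.10.0.100   # 51
```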

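Alternatively, in the web UI under Network, create the network internal-net and then its subnet internal-sub.

Adding a second NIC to the instance OS

When building the OS image, also configure a second NIC, then upload the image to Glance.

When creating an instance in the web UI, the network you add first becomes eth0 and the one you add second becomes eth1. Here the internal network is eth0 and the external network is eth1.

    # Check internal and external connectivity
    ping 10.10.7.101
    ping 172.31.7.101

Self-service networks

Similar to a cloud provider's VPC.

Controller node

Dashboard configuration

https://docs.openstack.org/horizon/2024.2/install/install-rdo.html

Enable layer-3 (router) support

    # /etc/openstack-dashboard/local_settings
    OPENSTACK_NEUTRON_NETWORK = {
        ...
        'enable_router': True,
        'enable_quotas': True,
        'enable_distributed_router': True,
        'enable_ha_router': True,
        'enable_fip_topology_check': True,
    }
    systemctl restart httpd

Create VPC networks

    source admin-openrc    # use the admin environment
    openstack network create admin-net    # create the admin user's network
    openstack subnet create --network admin-net --dns-nameserver 223.5.5.5 --gateway 10.20.3.254 --subnet-range 10.20.0.0/22 admin-sube    # create the subnet
    openstack router create admin-router    # create a router, used for address translation
    neutron router-interface-add admin-router admin-sube    # attach the subnet to the router
    neutron router-gateway-set admin-router external-net    # connect the router to the external network
    source script/demo.sh    # switch to the chosen user's environment
    openstack network create myuser-net    # create the demo user's network
    openstack subnet create --network myuser-net --dns-nameserver 223.5.5.5 --gateway 10.20.3.254 --subnet-range 10.20.0.0/22 myuser-sube    # create the subnet
    openstack router create myuser-router    # create the router
    neutron router-interface-add myuser-router myuser-sube    # attach the subnet to the router
    neutron router-gateway-set myuser-router external-net    # connect the router to the external network
    # All of this can also be done by hand in the web UI under Network

To reach a self-service instance from outside, give it a floating IP: create the floating IP in the dashboard and associate it with the instance.

For instances on two different self-service networks to communicate, add the other network's subnet as an interface on the router:

    # From the command line
    neutron router-interface-add myuser-router xxxx    # attach the subnet to the router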

OpenStack Compute Service (Nova) in Depth

Contents

- Nova architecture
- Nova components and their functions
- Instance management
  - Basic management operations
  - Instance migration
  - Instance rescue

Assigning fixed IPs to an instance

    # Image: CentOS-7.6_v1; flavor: 1C-1G-50G
    # Availability zone projectB contains openstack-node2.online.local and openstack-node3.online.local
    # Give an instance a single fixed IP
    nova boot --image CentOS-7.6_v1 --flavor 1C-1G-50G \
      --availability-zone projectB:openstack-node2.online.local --nic net-name=external-net,v4-fixed-ip=172.31.7.88 projectB-app1
    # Give an instance multiple fixed IPs
    nova boot --flavor=1C-1G-50G \
      --image=CentOS-7.6_v1 \
      --availability-zone projectB:openstack-node2.online.local \
      --nic net-id=16d4c3ee-7c91-4fc4-8b74-3afcfa992108,v4-fixed-ip=172.31.7.88 \
      --nic net-id=56a614a5-b5c1-4aa4-8553-f50ef8363a07,v4-fixed-ip=10.10.7.88 \
      projectB-app1

Tuning

Changing resource quotas

Show the current quotas:

    neutron quota-show admin
    neutron port-list

Method 1: edit the configuration file

    # On every node, change the global port and other limits
    vim /etc/neutron/neutron.conf
    [quotas]
    quota_network = 10
    quota_subnet = 10
    quota_port = 50
    quota_driver = neutron.db.quota.driver.DbQuotaDriver
    quota_router = 10
    quota_floatingip = 1000
    quota_security_group_rule = 100
    # Restart the services
    systemctl restart openstack-nova-api neutron-server

Method 2: enable quota editing in the web UI

    vim /etc/openstack-dashboard/local_settings
    # inside OPENSTACK_NEUTRON_NETWORK:
    'enable_quotas': True
    systemctl restart httpd

In the web UI: Projects -> admin -> Modify Quotas.

Method 3: change the default limits for all projects from the command line

    nova quota-class-update default --instances 20
    nova quota-class-update default --ram 81920
    nova quota-class-update default --cores 80

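Restarting instances automatically after a host boots

In the [DEFAULT] section of /etc/nova/nova.conf on the controllers and compute nodes:

resume_guests_state_on_host_boot=true

CPU overcommit

The default is 16: up to 16 times as many virtual CPUs as the host has physical CPUs can be allocated.

cpu_allocation_ratio=16

Memory overcommit

Allow virtual memory of up to 1.5 times the physical memory.

ram_allocation_ratio=1.5

Disk overcommit

Avoid overcommitting disk where possible, because running out of real disk space can cause data loss.

disk_allocation_ratio=1.0

Reserved disk space

Reserve part of the disk for the host system (in MB; 20480 MB = 20 GB).

reserved_host_disk_mb=20480

Reserved memory

Reserve some memory for the host system (in MB).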

reserved_host_memory_mb=4086
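The ratios and reservations above combine into the capacity the scheduler sees: for each resource, Placement uses (total - reserved) * allocation_ratio. The numbers below are worked for a hypothetical compute node:

- 32 CPU threads, with nothing reserved;
- 128 GiB (131072 MB) of RAM;
- 1000 GB of disk, where reserved_host_disk_mb is counted in whole GB (20480 MB = 20 GB).

capacity is a hypothetical helper.

```shell
#!/usr/bin/env bash
# capacity TOTAL RESERVED RATIO: schedulable amount = (TOTAL - RESERVED) * RATIO
capacity() {
  awk -v t="$1" -v r="$2" -v a="$3" 'BEGIN { printf "%d\n", (t - r) * a }'
}

capacity 32 0 16            # VCPU:      512 vCPUs
capacity 131072 4086 1.5    # MEMORY_MB: 190479 MB
capacity 1000 20 1.0        # DISK_GB:   980 GB
```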

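Allowing instance resize (flavor change)

Sometimes an existing instance needs more memory or disk for business reasons, so allow its flavor to be changed later.

In the [DEFAULT] section of /etc/nova/nova.conf on the controllers and compute nodes:

allow_resize_to_same_host=true

1. On every compute node, give the nova user a login shell

    usermod nova -s /bin/bash

2. Set a login password for nova

    echo xxxx | passwd --stdin nova

3. Switch to the nova user and generate a key pair

    su - nova
    ssh-keygen -t rsa -P '' -f ~/.ssh/id_rsa &>/dev/null
    # Copy the key to every compute node (in both directions)
    ssh-copy-id -i ~/.ssh/id_rsa.pub nova@x.x.x.x
    ssh x.x.x.x    # make sure the login works without a password

4. In the web UI

Resize the instance. It is shut down and migrated to another compute node.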

OpenStack Block Storage Service (Cinder) in Depth

Contents

- Cinder architecture

- Cinder scheduling algorithms

- Volume management

Similar to a cloud disk (volume).

Performance is mediocre; not recommended.

Installation

Official Cinder installation guide: https://docs.openstack.org/cinder/2024.2/install/

Controller node

Values to substitute

    CINDER_DBPASS: cinder123
    CINDER_PASS (cinder user password): cinder
    controller: openstack-vip.online.local
    RABBIT_PASS: openstack123

Installation: prerequisites

    #1 Create the database
    mysql -u root -p
    MariaDB [(none)]> CREATE DATABASE cinder;
    MariaDB [(none)]> GRANT ALL PRIVILEGES ON cinder.* TO 'cinder'@'%' IDENTIFIED BY 'cinder123';
    #2 Load the admin credentials
    . admin-openrc
    #3 Create the cinder user (enter "cinder" at both password prompts) and the service
    openstack user create --domain default --password-prompt cinder
    openstack role add --project service --user cinder admin
    openstack service create --name cinderv3 --description "OpenStack Block Storage" volumev3
    #4 Create the API endpoints
    openstack endpoint create --region RegionOne \
      volumev3 public http://openstack-vip.online.local:8776/v3/%\(project_id\)s
    openstack endpoint create --region RegionOne \
      volumev3 internal http://openstack-vip.online.local:8776/v3/%\(project_id\)s
    openstack endpoint create --region RegionOne \
      volumev3 admin http://openstack-vip.online.local:8776/v3/%\(project_id\)s
    # Verify
    openstack endpoint list

HAProxy configuration

    listen openstack-cinder-8776
        bind 172.31.7.248:8776
        mode tcp
        server 172.31.7.101 172.31.7.101:8776 check inter 3s fall 3 rise 5
        server 172.31.7.102 172.31.7.102:8776 check inter 3s fall 3 rise 5

    systemctl reload haproxy

Installation: install and configure the services

    #1 Install
    yum install openstack-cinder -y

    #2 /etc/cinder/cinder.conf
    [database]
    # ...
    connection = mysql+pymysql://cinder:cinder123@openstack-vip.online.local/cinder

    [DEFAULT]
    # ...
    transport_url = rabbit://openstack:openstack123@openstack-vip.online.local
    auth_strategy = keystone
    # my_ip is this controller's management IP
    my_ip = 172.31.7.101

    [keystone_authtoken]
    # ...
    www_authenticate_uri = http://openstack-vip.online.local:5000
    auth_url = http://openstack-vip.online.local:5000
    memcached_servers = openstack-vip.online.local:11211
    auth_type = password
    project_domain_name = default
    user_domain_name = default
    project_name = service
    username = cinder
    password = cinder

    [oslo_concurrency]
    # ...
    lock_path = /var/lib/cinder/tmp

    #3 Populate the database
    su -s /bin/sh -c "cinder-manage db sync" cinder
    # Verify: the cinder database now contains tables

    # In /etc/nova/nova.conf
    [cinder]
    os_region_name = RegionOne

    # Finally
    systemctl restart openstack-nova-api.service
    systemctl enable openstack-cinder-api.service openstack-cinder-scheduler.service
    systemctl start openstack-cinder-api.service openstack-cinder-scheduler.service

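Storage node

Install on a physical machine.

Install the yum repository

    yum list *openstack*
    yum install centos-release-openstack-<release>

Installation: prerequisites

    #1 Install the LVM packages
    yum install lvm2 device-mapper-persistent-data
    systemctl enable lvm2-lvmetad.service
    systemctl start lvm2-lvmetad.service
    #2 Create the LVM physical volume and the cinder-volumes volume group
    pvcreate /dev/sdb
    vgcreate cinder-volumes /dev/sdb
    #3 Configure /etc/lvm/lvm.conf so that LVM only scans sdb
    # (if the OS disk itself uses LVM, accept it as well, e.g. "a/sda/")
    devices {
    ...
    filter = [ "a/sdb/", "r/.*/"]
    }
    systemctl restart lvm2-lvmetad.service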
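The filter must list every device LVM should scan. As the official Cinder guide notes, that includes the operating-system disk if the OS itself is installed on LVM. The helper below only builds the line. lvm_filter is hypothetical, and the device names are examples.

```shell
#!/usr/bin/env bash
# lvm_filter DEV...: print an lvm.conf devices/filter line that accepts only DEV...
lvm_filter() {
  local out="filter = [" dev
  for dev in "$@"; do
    out+=" \"a/$dev/\","
  done
  echo "$out \"r/.*/\" ]"
}

lvm_filter sdb       # filter = [ "a/sdb/", "r/.*/" ]
lvm_filter sda sdb   # filter = [ "a/sda/", "a/sdb/", "r/.*/" ]
```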

Values to substitute

    CINDER_DBPASS: cinder123
    CINDER_PASS: cinder
    controller: openstack-vip.online.local
    RABBIT_PASS: openstack123
    MANAGEMENT_INTERFACE_IP_ADDRESS: the local host's IP

Installation: install and configure

    yum install openstack-cinder targetcli

    # /etc/cinder/cinder.conf
    [database]
    # ...
    connection = mysql+pymysql://cinder:cinder123@openstack-vip.online.local/cinder

    [DEFAULT]
    # ...
    transport_url = rabbit://openstack:openstack123@openstack-vip.online.local
    auth_strategy = keystone
    my_ip = 172.31.7.103
    enabled_backends = lvm
    glance_api_servers = http://openstack-vip.online.local:9292

    [keystone_authtoken]
    # ...
    www_authenticate_uri = http://openstack-vip.online.local:5000
    auth_url = http://openstack-vip.online.local:5000
    memcached_servers = openstack-vip.online.local:11211
    auth_type = password
    project_domain_name = default
    user_domain_name = default
    project_name = service
    username = cinder
    password = cinder

    [lvm]
    volume_driver = cinder.volume.drivers.lvm.LVMVolumeDriver
    volume_group = cinder-volumes
    target_protocol = iscsi
    target_helper = lioadm

    [oslo_concurrency]
    # ...
    lock_path = /var/lib/cinder/tmp

    systemctl enable openstack-cinder-volume.service target.service
    systemctl start openstack-cinder-volume.service target.service
    # Verify
    source admin-openrc
    openstack volume service list

Log in to the web UI again: a Volumes section now appears. Create a volume, then use Manage Attachments to attach it to an instance.

Instances created from now on use Cinder for their system disks, which makes later migration easier.

Volume types

    cinder type-create lvm
    cinder type-create nfs
    # Associate the type with a backend; the name must match volume_backend_name exactly
    cinder type-key lvm set volume_backend_name=Openstack-lvm
    # /etc/cinder/cinder.conf
    [lvm]
    #...
    volume_backend_name = Openstack-lvm

NFS volume type

On the physical host 172.31.7.105:

    # Install NFS
    yum install nfs-utils
    mkdir /data/cinder
    vim /etc/exports
    /data/cinder *(rw,no_root_squash)
    systemctl restart nfs-server

Configure Cinder to use NFS (controller node)

    # /etc/cinder/cinder.conf
    [DEFAULT]
    enabled_backends = nfs
    [nfs]
    volume_backend_name = openstack-NFS
    volume_driver = cinder.volume.drivers.nfs.NfsDriver
    nfs_shares_config = /etc/cinder/nfs_shares
    nfs_mount_point_base = $state_path/mnt

    # /etc/cinder/nfs_shares
    172.31.7.105:/data/cinder

    chown root:cinder /etc/cinder/nfs_shares
    systemctl restart openstack-cinder-api.service openstack-cinder-scheduler.service
    systemctl restart openstack-cinder-volume.service target.service
    cinder service-list
    # Configure the volume type (skip type-create if the nfs type already exists)
    cinder type-create nfs
    cinder type-key nfs set volume_backend_name=openstack-NFS

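Case studies

Changing an instance's IP address

If there is an address conflict, the instance's IP can be changed in the Neutron database:

    # Find the port that holds the IP
    openstack port list | grep x.x.x.x
    # Look up the instance's ports
    mysql -uneutron -h172.31.7.248 -p
    > use neutron
    > select * from ports where device_id='xxxxx';
    # Check which IP is allocated to the port
    > select * from ipallocations where port_id='xxxx';
    # Change the instance's IP in the database
    > update ipallocations set ip_address='x.x.x.x' where port_id='xxxxx';
    > flush privileges;
    # Inside the instance, configure the new IP
    vim ifcfg-eth0
    ...
    IPADDR=x.x.x.x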

High availability with HAProxy and Keepalived

Not recommended; the VIP can be configured directly on physical machines instead.

Create the instances

    # Give each instance multiple fixed IPs
    # App: 172.31.7.88-89
    nova boot --flavor=1C-1G-50G \
      --image=CentOS-7.6_v1 \
      --availability-zone projectB:openstack-node2.online.local \
      --nic net-id=16d4c3ee-7c91-4fc4-8b74-3afcfa992108,v4-fixed-ip=172.31.7.88 \
      --nic net-id=56a614a5-b5c1-4aa4-8553-f50ef8363a07,v4-fixed-ip=10.10.7.88 \
      projectB-app1
    nova boot --flavor=1C-1G-50G \
      --image=CentOS-7.6_v1 \
      --availability-zone projectB:openstack-node1.online.local \
      --nic net-id=16d4c3ee-7c91-4fc4-8b74-3afcfa992108,v4-fixed-ip=172.31.7.89 \
      --nic net-id=56a614a5-b5c1-4aa4-8553-f50ef8363a07,v4-fixed-ip=10.10.7.89 \
      projectB-app2
    # HA: 172.31.7.78-79
    nova boot --flavor=1C-1G-50G \
      --image=CentOS-7.6_v1 \
      --availability-zone projectB:openstack-node2.online.local \
      --nic net-id=16d4c3ee-7c91-4fc4-8b74-3afcfa992108,v4-fixed-ip=172.31.7.78 \
      --nic net-id=56a614a5-b5c1-4aa4-8553-f50ef8363a07,v4-fixed-ip=10.10.7.78 \
      projectB-lb1
    nova boot --flavor=1C-1G-50G \
      --image=CentOS-7.6_v1 \
      --availability-zone projectB:openstack-node3.online.local \
      --nic net-id=16d4c3ee-7c91-4fc4-8b74-3afcfa992108,v4-fixed-ip=172.31.7.79 \
      --nic net-id=56a614a5-b5c1-4aa4-8553-f50ef8363a07,v4-fixed-ip=10.10.7.79 \
      projectB-lb2

Install HAProxy + Keepalived

    # On 172.31.7.78-79
    yum install haproxy keepalived -y

    # keepalived
    vim /etc/keepalived/keepalived.conf

    global_defs {
        notification_email {
            acassen@firewall.loc
            failover@firewall.loc
            sysadmin@firewall.loc
        }
        notification_email_from Alexandre.Cassen@firewall.loc
        smtp_server 192.168.200.1
        smtp_connect_timeout 30
        router_id LVS_DEVEL
        vrrp_skip_check_adv_addr
        vrrp_strict      # strict mode: automatically adds firewall rules that make the VIP unreachable
        vrrp_iptables    # with vrrp_strict also set, no firewall rules are added; without it, they are
        vrrp_garp_interval 0
        vrrp_gna_interval 0
    }
    vrrp_instance VI_1 {
        state MASTER              # BACKUP on the other node
        interface eth0
        virtual_router_id 55      # change this line
        priority 100              # 80 on the other node
        advert_int 1
        authentication {
            auth_type PASS
            auth_pass 1111
        }
        virtual_ipaddress {
            172.31.7.16 dev eth0 label eth0:0
        }
    }

    # haproxy
    vim /etc/haproxy/haproxy.cfg
    ...
    listen tomcat-80
        bind 172.31.7.16:80
        mode http
        server 172.31.7.78 172.31.7.78:8080 check inter 3s fall 3 rise 5
        server 172.31.7.79 172.31.7.79:8080 check inter 3s fall 3 rise 5

    # Kernel parameter that lets HAProxy bind to an IP not present on the host
    vim /etc/sysctl.conf
    net.ipv4.ip_nonlocal_bind = 1
    sysctl -p

Associate the VIP with the instances

    openstack security group list
    openstack network list
    # Create a port that reserves the VIP, in the chosen security group
    neutron port-create --fixed-ip ip_address=172.31.7.16 --security-group <security group ID or name> <network ID or name>
    # List each instance's port ID
    openstack port list | grep 172.31.7.78
    openstack port list | grep 172.31.7.79
    # Allow the VIP on the instances' ports
    neutron port-update <portID78> --allowed_address_pairs list=true type=dict ip_address=172.31.7.16
    neutron port-update <portID79> --allowed_address_pairs list=true type=dict ip_address=172.31.7.16
    # Syntax for binding multiple addresses:
    # neutron port-update <portID79> --allowed_address_pairs list=true type=dict ip_address={"172.31.7.16","172.31.7.26"}
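The neutron CLI used above is the legacy client. The unified client can make the same allowed-address-pair change with openstack port set --allowed-address. The sketch below loops over the ports and prints the commands when DRY_RUN=1. The port IDs are placeholders, and allow_vip is a hypothetical helper.

```shell
#!/usr/bin/env bash
run() { if [ -n "${DRY_RUN:-}" ]; then echo "$*"; else "$@"; fi; }

# allow_vip VIP PORT...: permit VIP as an additional address on each port
allow_vip() {
  local vip=$1 port
  shift
  for port in "$@"; do
    run openstack port set --allowed-address ip-address="$vip" "$port"
  done
}

DRY_RUN=1 allow_vip 172.31.7.16 PORT_ID_78 PORT_ID_79
```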

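Security group rules

Allow VRRP traffic in and out. Keepalived's VRRP runs as IP protocol 112, not as TCP/UDP port 112, so the rule must match on the protocol number.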
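One way to express that rule with the unified client is sketched below, with a DRY_RUN switch. The security group name default and the optional source CIDR are assumptions, and allow_vrrp is a hypothetical helper. Only ingress is added, because a security group's default egress rules already allow all outbound traffic.

```shell
#!/usr/bin/env bash
run() { if [ -n "${DRY_RUN:-}" ]; then echo "$*"; else "$@"; fi; }

# allow_vrrp GROUP [REMOTE_CIDR]: allow inbound VRRP (IP protocol 112) on GROUP
allow_vrrp() {
  local group=$1 remote=${2:-}
  if [ -n "$remote" ]; then
    run openstack security group rule create --ingress --protocol 112 --remote-ip "$remote" "$group"
  else
    run openstack security group rule create --ingress --protocol 112 "$group"
  fi
}

DRY_RUN=1 allow_vrrp default 172.31.0.0/21
```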

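Using LVS

Kernel parameters on compute nodes

    # Needed when source NAT is involved: lets instance traffic be forwarded through the physical NIC
    cat <<\EOF >> /etc/sysctl.conf
    net.ipv4.ip_forward = 1
    EOF
    sysctl -p
    # Not needed if the instances use bridged networking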

Install Keepalived + LVS on the LVS instances

    yum install keepalived ipvsadm -y
    # keepalived configuration
    cat <<\EOF > /etc/keepalived/keepalived.conf
    global_defs {
        notification_email {
            acassen@firewall.loc
            failover@firewall.loc
            sysadmin@firewall.loc
        }
        notification_email_from Alexandre.Cassen@firewall.loc
        smtp_server 192.168.200.1
        smtp_connect_timeout 30
        router_id LVS_DEVEL
        vrrp_skip_check_adv_addr
        vrrp_strict      # strict mode: automatically adds firewall rules that make the VIP unreachable
        vrrp_iptables    # with vrrp_strict also set, no firewall rules are added; without it, they are
        vrrp_garp_interval 0
        vrrp_gna_interval 0
    }
    vrrp_instance VI_1 {
        state MASTER              # BACKUP on the other node
        interface eth0
        virtual_router_id 55      # change this line
        priority 100              # 80 on the other node
        advert_int 1
        authentication {
            auth_type PASS
            auth_pass 1111
        }
        virtual_ipaddress {
            172.31.7.16 dev eth0 label eth0:0
        }
    }
    # Nginx service virtual_server
    virtual_server 172.31.7.16 80 {      # VIP and port
        delay_loop 6                     # interval between real-server health checks
        lb_algo wrr                      # scheduling algorithm
        lb_kind DR                       # LVS mode
        #persistence_timeout 60          # persistent connection timeout
        protocol TCP                     # service protocol, usually TCP
        #sorry_server <IPADDR> <PORT>    # fallback server when every RS is down
        real_server 172.31.7.78 80 {     # RS IP and port
            weight 1                     # RS weight
            #notify_up <STRING>|<QUOTED-STRING>      # script run when the RS comes up
            #notify_down <STRING>|<QUOTED-STRING>    # script run when the RS goes down
            TCP_CHECK {                  # health check method for this RS
                connect_timeout 5        # timeout when there is no response
                nb_get_retry 3           # number of retries
                delay_before_retry 3     # interval between retries
                connect_port 80          # port to check
            }
        }
        real_server 172.31.7.79 80 {
            weight 1
            TCP_CHECK {
                connect_timeout 5
                nb_get_retry 3
                delay_before_retry 3
                connect_port 80
            }
        }
    }
    EOF

Bind the VIP on the Nginx instances (real servers)

    cat <<\EOF > lvs-dr.sh
    #!/bin/bash
    # LVS DR mode initialization for a real server
    LVS_VIP=172.31.7.16
    source /etc/profile
    case $1 in
    start)
        /sbin/ifconfig lo:0 $LVS_VIP netmask 255.255.255.255 broadcast $LVS_VIP
        /bin/route add -host $LVS_VIP dev lo:0
        echo 1 >/proc/sys/net/ipv4/conf/lo/arp_ignore
        echo 2 >/proc/sys/net/ipv4/conf/lo/arp_announce
        echo 1 >/proc/sys/net/ipv4/conf/all/arp_ignore
        echo 2 >/proc/sys/net/ipv4/conf/all/arp_announce
        sysctl -p &>/dev/null
        echo "RealServer Start OK"
        ;;
    stop)
        /sbin/ifconfig lo:0 down
        /bin/route del $LVS_VIP &>/dev/null
        echo 0 >/proc/sys/net/ipv4/conf/lo/arp_ignore
        echo 0 >/proc/sys/net/ipv4/conf/lo/arp_announce
        echo 0 >/proc/sys/net/ipv4/conf/all/arp_ignore
        echo 0 >/proc/sys/net/ipv4/conf/all/arp_announce
        sysctl -p &>/dev/null
        echo "RealServer Stop"
        ;;
    *)
        echo "Usage: $0 {start|stop}"
        exit 1
    esac
    exit 0
    EOF
    bash lvs-dr.sh start

Check (on the LVS director):

    ipvsadm -Ln

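OpenStack High-Availability Configuration

Contents

- OpenStack HA architecture
- Deploying HA OpenStack with Kolla
  - Kolla tooling and HA environment preparation
  - Deploying compute nodes
- Other common services
  - New dashboard: Skyline
  - Orchestration service: Heat
  - Load balancing service: Octavia
- OpenStack monitoring

Reference: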

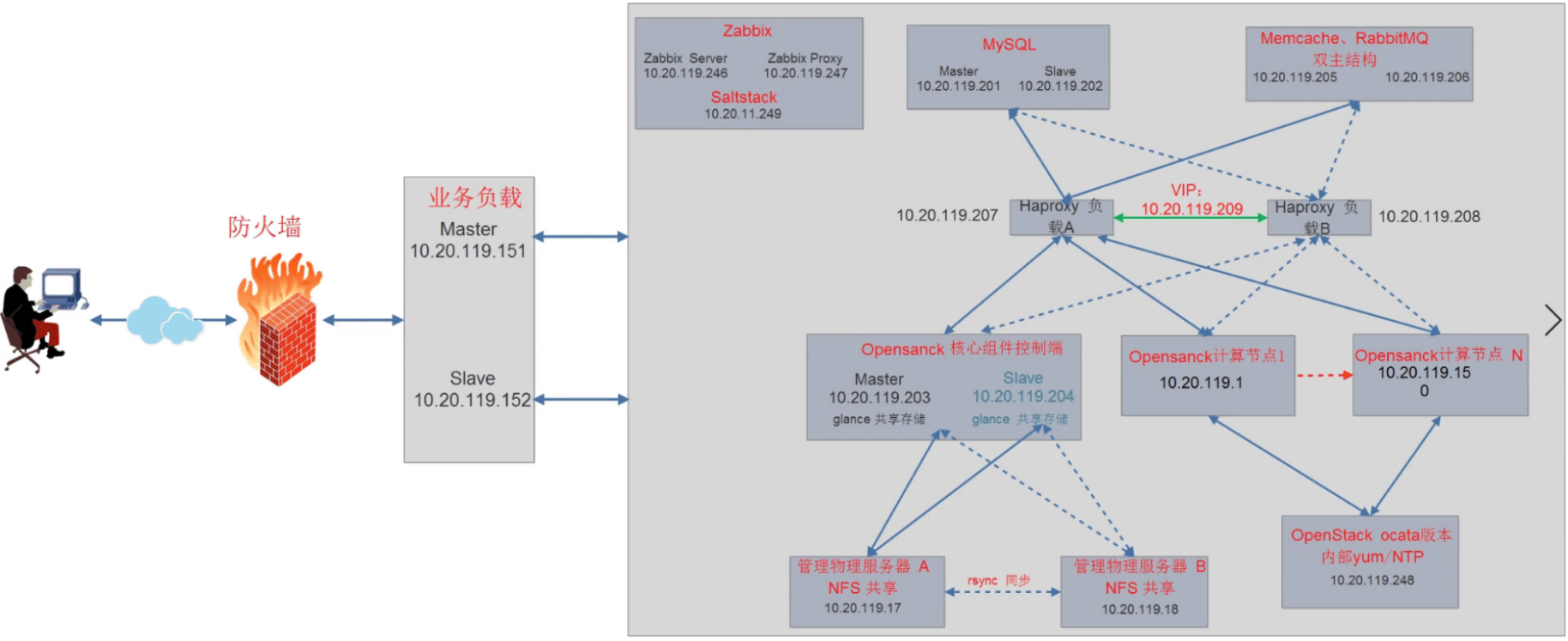

OpenStack IP plan 1: 10.20.112.0/21

| Category | Internal network | Notes |
| --- | --- | --- |
| Service VIPs | 10.20.112.0-10.20.113.255 | 508 service VIPs |
| Database VIPs | 10.20.114.0/24 | 254 database VIPs |
| VM IPs | 10.20.115.0-10.20.118.254 | 1016 service VMs |
| Physical host IPs | 10.20.119.1-10.20.119.150 | 150 physical hosts |
| HA-LVS VMs | 10.20.119.151-10.20.119.200 | 50 IPs |
| OpenStack service VM IPs | 10.20.119.201-10.20.119.250 | 50 OpenStack service VMs |
| Gateway | 10.20.127.254 | Common default gateway |
| Switches | 10.20.119.240-246/250/251 | |
| Jump host | 10.20.119.247 | |
| NTP/YUM | 10.20.119.248 | |
| Ansible/SaltStack | 10.20.119.249 | |

Note: the last three IPs, 10.20.119.252/253/254, are already used by the firewall.
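The counts in the Notes column follow from excluding the .0 and .255 address of every /24 a range touches. The sketch below recomputes them, so the plan can be rechecked after edits. count_hosts is a hypothetical helper, and the exclusion rule is inferred from the numbers in the table rather than stated there.

```shell
#!/usr/bin/env bash
ip2int() {
  local IFS=.
  local a b c d
  read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# count_hosts START END: addresses in START..END, skipping every x.x.x.0 and x.x.x.255
count_hosts() {
  local s e i n=0
  s=$(ip2int "$1")
  e=$(ip2int "$2")
  for (( i = s; i <= e; i++ )); do
    (( (i & 255) == 0 || (i & 255) == 255 )) || n=$(( n + 1 ))
  done
  echo "$n"
}

count_hosts 10.20.112.0 10.20.113.255     # 508  (service VIPs)
count_hosts 10.20.114.0 10.20.114.255     # 254  (database VIPs)
count_hosts 10.20.115.0 10.20.118.254     # 1016 (VMs)
count_hosts 10.20.119.151 10.20.119.200   # 50   (HA-LVS)
```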

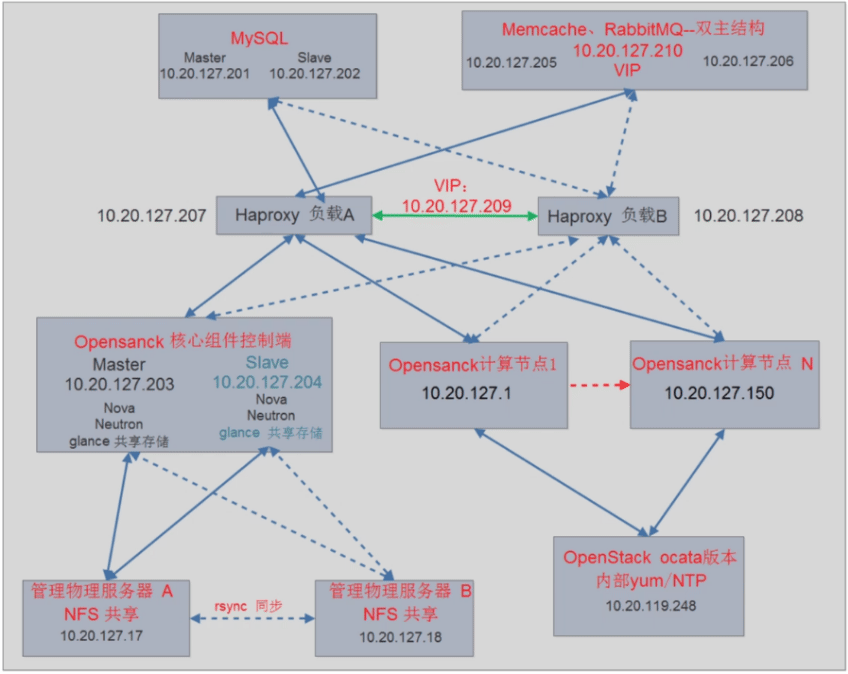

OpenStack IP plan 2: 10.20.120.0/21

| Category | Internal network | Notes |
| --- | --- | --- |
| Service VIPs | 10.20.120.0-10.20.121.255 | 508 service VIPs |
| Database VIPs | 10.20.122.0/24 | 254 database VIPs |
| VM IPs | 10.20.123.0-10.20.126.254 | 1016 service VMs |
| Physical host IPs | 10.20.127.1-10.20.127.150 | 150 physical hosts |
| HA-LVS VMs | 10.20.127.151-10.20.127.200 | 50 IPs |
| OpenStack service VM IPs | 10.20.127.201-10.20.127.250 | 50 OpenStack service VMs |
| Gateway | 10.20.127.254 | Common default gateway |
| Switches | 10.20.127.240-246/250/251 | |
| Jump host | 10.20.127.247 | |
| NTP/YUM | 10.20.127.248 | |
| Ansible/SaltStack | 10.20.127.249 | |

Note: the last three IPs, 10.20.127.252/253/254, are already used by the firewall.

OpenStack VM networks:

| VM internal IP | VM external IP | VM storage network IP | Physical host |
| --- | --- | --- | --- |
| 10.20.0.0/24 | 10.10.0.0/24 | 10.30.0.0/24 | |